-

-

-

-

- -

-

-

-We propose a simple yet effective Relation Graph augmented Learning RGL method that can obtain better performance in few-shot natural language understanding tasks.

-

-RGL constructs a relation graph based on the label consistency between samples in the same batch, and learns to solve the resultant node classification and link prediction problems of the relation graphs. In this way, RGL fully exploits the limited supervised information, which can boost the tuning effectiveness.

-

-# Prepare the data

-

-We evaluate on the GLUE variant for few-shot learning in the paper, including SST-2, SST-5, MR, CR, MPQA, Subj, TREC, CoLA, MNLI, MNLI-mm, SNLI, QNLI, RTE, MRPC, QQP and STS-B. Please download the [datasets](https://paddlenlp.bj.bcebos.com/datasets/k-shot-glue/rgl-k-shot.zip) and extract the data files to the path ``./data/k-shot``.

-

-

-# Experiments

-

-The structure of the code:

-

-```

-├── scripts/

-│ ├── run_pet.sh # Script for PET

-│ └── run_rgl.sh # Script for RGL

-├── template.py # The parser for prompt template

-├── verbalizer.py # The mapping from labels to corresponding words

-├── tokenizer.py # The tokenizer wrapeer to conduct text truncation

-├── utils.py # The tools

-└── rgl.py # The training process of RGL

-```

-

-## How to define a template

-

-We inspire from [OpenPrompt](https://github.com/thunlp/OpenPrompt/tree/main) and define template as a list of dictionary. The key of raw texts in datasets is `text`, and the corresponding value is the keyword of text in loaded dataset, where we use `text_a` to denote the first sentence in every example and `text_b` to denote the other sentences by default.

-

-For example, given the template ``{'text':'text_a'} It was {'mask'}.`` and a sample text ``nothing happens , and it happens to flat characters .`` the input text will be ``nothing happens , and it happens to flat characters . It was

-

-

-

-

| 任务 | -英文模型 | -中文模型 | -||||

| 规模 | -证据平均长度比例 | -证据平均数量 | -规模 | -证据平均长度比例 | -证据平均数量 | -|

| 情感分析 | -1,499 | -19.20% | -2.1 | -1,646 | -30.10% | -1.4 | -

| 相似度任务 | -1,659 | -52.20% | -1.0 | -1,629 | -70.50% | -1.0 | -

| 阅读理解 | -1,507 | -10.20% | -1.0 | -1,762 | -9.60% | -1.0 | -

- -

-

-

- -__一致性__:评估(原始输入,对应扰动输入)对中词重要度排序的一致性。证据分析方法对输入中每个词赋予一个重要度,基于该重要度对输入中所有词进行排序。我们使用搜索排序中的MAP(mean average precision)指标来计算两个排序的一致性。这里给出了MAP的两种计算方式,分别见以下两个公式:

-公式一(正在使用):

-

-

-

-

-

-

- -__忠诚性__:评估模型给出的证据的忠诚性,即模型是否真的基于给出的证据进行预测的。这里从充分性和完备性两个角度进行评估。充分性,即模型给出的证据是否包含了预测需要的全部信息(即yri = yxi,其中ri表示输入xi的证据,yx表示模型对输入x的预测结果);完备性,即模型对输入x的预测结果(即yxi\ri ≠ yxi,其中xi\ri表示从输入xi中去除证据ri)。基于这两个维度,我们提出了一个新的指标New-P,计算方式如下:

- -

-

-

-

-

- -Attention-based([Jain and Wallace, 2019](https://arxiv.org/pdf/1902.10186.pdf)): - - 将注意力分数作为词重要度。注意力分数的获取取决于具体模型架构,我们提供了基于LSTM和transformer框架的提取方法,见每个具体任务下的saliency_map目录。 - -Gradient-based([Sundararajan et al., 2017](https://arxiv.org/pdf/1703.01365.pdf)): - - 基于梯度给出每个词重要度。我们这里给出了integrated gradient计算方式,具体见saliency_map目录或论文[Axiomatic attribution for deep networks](https://arxiv.org/pdf/1703.01365.pdf)。 - -Linear-based([Ribeiro et al.. 2016](https://arxiv.org/pdf/1602.04938.pdf)): - - 使用线性模型局部模拟待验证模型,线性模型学习到的词的权重作为该词对预测结果的重要度,详细见论文[" why should i trust you?" explaining the predictions of any classifier](https://arxiv.org/pdf/1602.04938.pdf)。 - -### 三个任务的被评估模型 -为验证模型复杂度、参数规模对可解释的影响,针对每个任务,我们分别提供了基于LSTM(简单结构)的模型、及Transformer-based预训练模型(复杂结构),其中,对于预训练模型,提供了base版本和large版本。

-模型代码位置:/model_interpretation/task/{task}/,({task}可取值为["senti","similarity","mrc"],其中senti代表情感分析,similarity代表相似度计算,mrc代表阅读理解)

-模型运行及依赖环境请参考下方的“平台使用”。 - - -## 平台使用 -### 环境准备 -代码运行需要 Linux 主机,Python 3.8(推荐,其他低版本未测试过) 和 PaddlePaddle 2.1 以上版本。 - -### 推荐的环境 - -* 操作系统 CentOS 7.5 -* Python 3.8.12 -* PaddlePaddle 2.1.0 -* PaddleNLP 2.2.4 - -除此之外,需要使用支持 GPU 的硬件环境。 - -### PaddlePaddle - -需要安装GPU版的PaddlePaddle。 - -``` -# GPU 版本 -pip3 install paddlepaddle-gpu -``` - -更多关于 PaddlePaddle 的安装教程、使用方法等请参考[官方文档](https://www.paddlepaddle.org.cn/#quick-start). - -### 第三方 Python 库 -除 PaddlePaddle 及其依赖之外,还依赖其它第三方 Python 库,位于代码根目录的 requirements.txt 文件中。 - -可使用 pip 一键安装 - -```pip3 install -r requirements.txt``` - -## 数据准备 -### 模型训练数据 -#### 情感分析任务: - -中文推荐使用ChnSentiCorp,英文推荐使用SST-2。本模块提供的中英文情感分析模型就是基于这两个数据集的。若修改训练数据集,请修改/model_interpretation/task/senti/pretrained_models/train.py (RoBERTa) 以及 /model_interpretation/task/senti/rnn/train.py (LSTM)。 - -[//]:数据集会被缓存到/home/work/.paddlenlp/datasets/目录下 - -#### 相似度计算: - -中文推荐使用LCQMC,英文推荐使用QQP。本模块提供的中英文相似度计算模型就是基于这两个数据集的,若修改训练数据集,请修改/model_interpretation/task/similarity/pretrained_models/train_pointwise.py(RoBERTa)以及/model_interpretation/task/similarity/simnet/train.py(LSTM)。 - -#### 阅读理解中英文: - -中文推荐使用[DuReader_Checklist](https://dataset-bj.cdn.bcebos.com/lic2021/dureader_checklist.dataset.tar.gz),英文推荐使用[SQUDA2](https://rajpurkar.github.io/SQuAD-explorer/dataset/train-v2.0.json)。请将阅读理解训练数据放置在/model_interpretation/task/mrc/data目录下。 - -### 下载预训练模型 - -使用paddlenlp框架自动缓存模型文件。 - -### 其他数据下载 -请运行download.sh自动下载 - -### 评测数据 -评测数据样例位于/model_interpretation/data/目录下,每一行为一条JSON格式的数据。 -#### 情感分析数据格式: - id: 数据的编号,作为该条数据识别key; - context:原文本数据; - sent_token:原文本数据的标准分词,注意:golden证据是基于该分词的,预测证据也需要与该分词对应; - sample_type: 数据的类性,分为原始数据(ori)和扰动数据(disturb); - rel_ids:与原始数据关联的扰动数据的id列表(只有原始数据有); - -#### 相似度数据格式: - id:数据的编号,作为该条数据识别key; - query(英文中为sentence1):句子1的原文本数据; - title(英文中为sentence2):句子2的原文本数据; - text_q_seg:句子1的标准分词,注意:golden证据是基于该分词的,预测证据也需要与该分词对应; - text_t_seg:句子2的标准分词,注意:golden证据是基于该分词的,预测证据也需要与该分词对应; - sample_type: 数据的类性,分为原始数据(ori)和扰动数据(disturb); - rel_ids:与原始数据关联的扰动数据的id列表(只有原始数据有); - -#### 阅读理解数据格式: - id:数据的编号,作为该条数据识别key; - title:文章标题; - context:文章主体; - question:文章的问题; - sent_token:原文本数据的标准分词,注意:golden证据是基于该分词的,预测证据也需要与该分词对应; - sample_type: 数据的类性,分为原始数据(ori)和扰动数据(disturb); - rel_ids:与原始数据关联的扰动数据的id列表(只有原始数据有); -## 模型运行 -### 模型预测: - - model_interpretation/task/{task}/run_inter_all.sh (生成所有结果) - model_interpretation/task/{task}/run_inter.sh (生成单个配置的结果,配置可以选择不同的评估模型,以及不同的证据抽取方法、语言) - -(注:{task}可取值为["senti","similarity","mrc"],其中senti代表情感分析,similarity代表相似度计算,mrc代表阅读理解) - -### 证据抽取: - cd model_interpretation/rationale_extraction - ./generate.sh - -### 可解释评估: -#### 合理性(plausibility): - model_interpretation/evaluation/plausibility/run_f1.sh -#### 一致性(consistency): - model_interpretation/evaluation/consistency/run_map.sh -#### 忠诚性(faithfulness): - model_interpretation/evaluation/faithfulness/run_newp.sh - -### 评估报告 -中文情感分析评估报告样例: -

| 模型 + 证据抽取方法 | -情感分析 | -|||

| Acc | -Macro-F1 | -MAP | -New_P | -|

| LSTM + IG | -56.8 | -36.8 | -59.8 | -91.4 | -

| RoBERTa-base + IG | -62.4 | -36.4 | -48.7 | -48.9 | -

| RoBERTa-large + IG | -65.3 | -38.3 | -41.9 | -37.8 | -

-

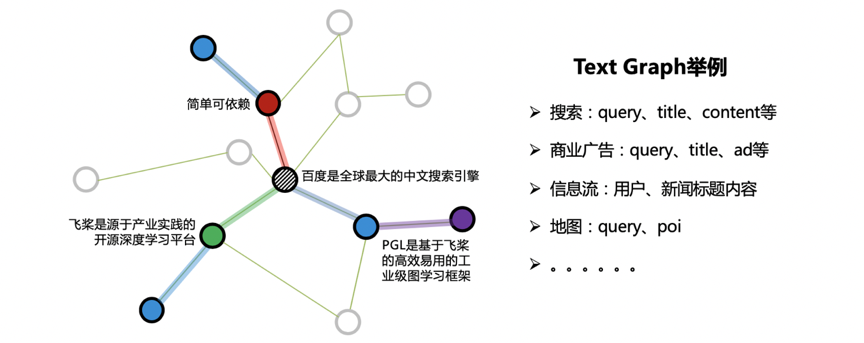

-**ErnieSage** 由飞桨PGL团队提出,是ERNIE SAmple aggreGatE的简称,该模型可以同时建模文本语义与图结构信息,有效提升 Text Graph 的应用效果。其中 [**ERNIE**](https://github.com/PaddlePaddle/ERNIE) 是百度推出的基于知识增强的持续学习语义理解框架。

-

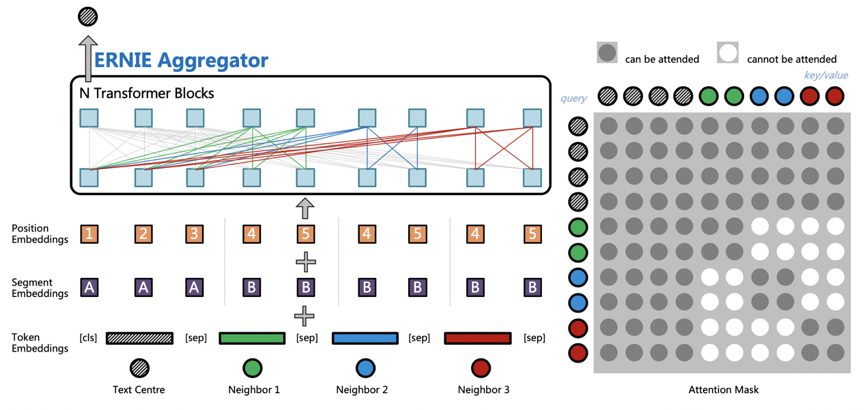

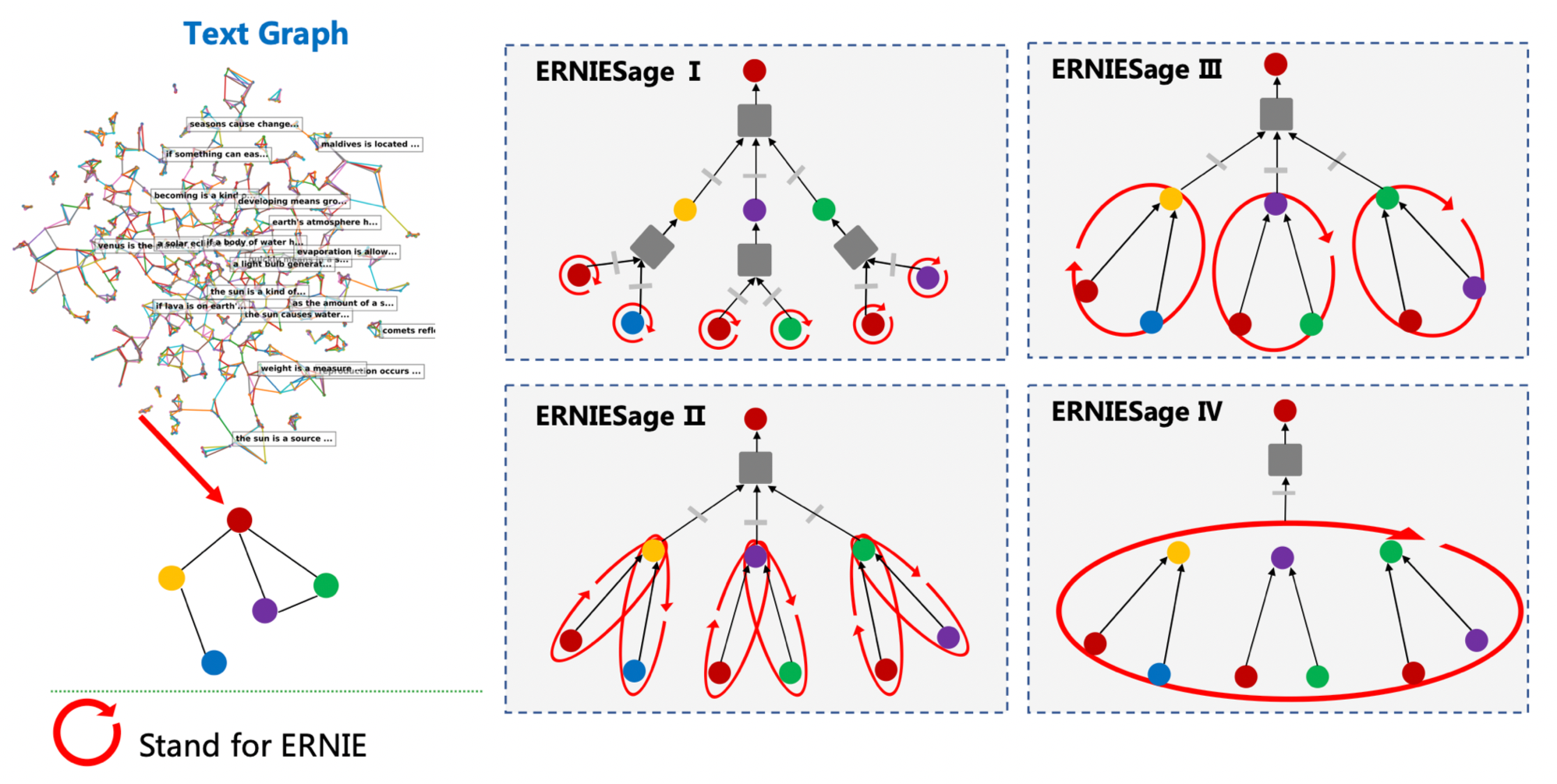

-**ErnieSage** 是 ERNIE 与 GraphSAGE 碰撞的结果,是 ERNIE SAmple aggreGatE 的简称,它的结构如下图所示,主要思想是通过 ERNIE 作为聚合函数(Aggregators),建模自身节点和邻居节点的语义与结构关系。ErnieSage 对于文本的建模是构建在邻居聚合的阶段,中心节点文本会与所有邻居节点文本进行拼接;然后通过预训练的 ERNIE 模型进行消息汇聚,捕捉中心节点以及邻居节点之间的相互关系;最后使用 ErnieSage 搭配独特的邻居互相看不见的 Attention Mask 和独立的 Position Embedding 体系,就可以轻松构建 TextGraph 中句子之间以及词之间的关系。

-

-

-

-**ErnieSage** 由飞桨PGL团队提出,是ERNIE SAmple aggreGatE的简称,该模型可以同时建模文本语义与图结构信息,有效提升 Text Graph 的应用效果。其中 [**ERNIE**](https://github.com/PaddlePaddle/ERNIE) 是百度推出的基于知识增强的持续学习语义理解框架。

-

-**ErnieSage** 是 ERNIE 与 GraphSAGE 碰撞的结果,是 ERNIE SAmple aggreGatE 的简称,它的结构如下图所示,主要思想是通过 ERNIE 作为聚合函数(Aggregators),建模自身节点和邻居节点的语义与结构关系。ErnieSage 对于文本的建模是构建在邻居聚合的阶段,中心节点文本会与所有邻居节点文本进行拼接;然后通过预训练的 ERNIE 模型进行消息汇聚,捕捉中心节点以及邻居节点之间的相互关系;最后使用 ErnieSage 搭配独特的邻居互相看不见的 Attention Mask 和独立的 Position Embedding 体系,就可以轻松构建 TextGraph 中句子之间以及词之间的关系。

-

- -

-使用ID特征的GraphSAGE只能够建模图的结构信息,而单独的ERNIE只能处理文本信息。通过飞桨PGL搭建的图与文本的桥梁,**ErnieSage**能够很简单的把GraphSAGE以及ERNIE的优点结合一起。以下面TextGraph的场景,**ErnieSage**的效果能够比单独的ERNIE以及GraphSAGE模型都要好。

-

-**ErnieSage**可以很轻松地在基于PaddleNLP构建基于Ernie的图神经网络,目前PaddleNLP提供了V2版本的ErnieSage模型:

-

-- **ErnieSage V2**: ERNIE 作用在text graph的边上;

-

-

-

-使用ID特征的GraphSAGE只能够建模图的结构信息,而单独的ERNIE只能处理文本信息。通过飞桨PGL搭建的图与文本的桥梁,**ErnieSage**能够很简单的把GraphSAGE以及ERNIE的优点结合一起。以下面TextGraph的场景,**ErnieSage**的效果能够比单独的ERNIE以及GraphSAGE模型都要好。

-

-**ErnieSage**可以很轻松地在基于PaddleNLP构建基于Ernie的图神经网络,目前PaddleNLP提供了V2版本的ErnieSage模型:

-

-- **ErnieSage V2**: ERNIE 作用在text graph的边上;

-

- -

-## 环境依赖

-

-- pgl >= 2.1

-安装命令 `pip install pgl\>=2.1`

-

-## 数据准备

-示例数据```data.txt```中使用了NLPCC2016-DBQA的部分数据,格式为每行"query \t answer"。

-```text

-NLPCC2016-DBQA 是由国际自然语言处理和中文计算会议 NLPCC 于 2016 年举办的评测任务,其目标是从候选中找到合适的文档作为问题的答案。[链接: http://tcci.ccf.org.cn/conference/2016/dldoc/evagline2.pdf]

-```

-

-## 如何运行

-

-我们采用了[PaddlePaddle Fleet](https://github.com/PaddlePaddle/Fleet)作为我们的分布式训练框架,在```config/*.yaml```中,目前支持的[ERNIE](https://github.com/PaddlePaddle/ERNIE)预训练语义模型包括**ernie**以及**ernie_tiny**,通过config/erniesage_link_prediction.yaml中的ernie_name指定。

-

-

-```sh

-# 数据预处理,建图

-python ./preprocessing/dump_graph.py --conf ./config/erniesage_link_prediction.yaml

-# GPU多卡或单卡模式ErnieSage

-python -m paddle.distributed.launch --gpus "0" link_prediction.py --conf ./config/erniesage_link_prediction.yaml

-# 对图节点的embeding进行预测, 单卡或多卡

-python -m paddle.distributed.launch --gpus "0" link_prediction.py --conf ./config/erniesage_link_prediction.yaml --do_predict

-```

-

-## 超参数设置

-

-- epochs: 训练的轮数

-- graph_data: 训练模型时用到的图结构数据,使用“text1 \t text"格式。

-- train_data: 训练时的边,与graph_data格式相同,一般可以直接用graph_data。

-- graph_work_path: 临时存储graph数据中间文件的目录。

-- samples: 采样邻居数

-- model_type: 模型类型,包括ErnieSageV2。

-- ernie_name: 热启模型类型,支持“ernie”和"ernie_tiny",后者速度更快,指定该参数后会自动从服务器下载预训练模型文件。

-- num_layers: 图神经网络层数。

-- hidden_size: 隐藏层大小。

-- batch_size: 训练时的batchsize。

-- infer_batch_size: 预测时batchsize。

diff --git a/examples/text_graph/erniesage/config/erniesage_link_prediction.yaml b/examples/text_graph/erniesage/config/erniesage_link_prediction.yaml

deleted file mode 100644

index 970f5de365b7..000000000000

--- a/examples/text_graph/erniesage/config/erniesage_link_prediction.yaml

+++ /dev/null

@@ -1,40 +0,0 @@

-# Global Environment Settings

-

-# trainer config ------

-device: "gpu" # use cpu or gpu devices to train.

-seed: 2020

-

-task: "link_prediction"

-model_name_or_path: "ernie-tiny" # ernie-tiny or ernie-1.0 avaiable

-sample_workers: 1

-optimizer_type: "adam"

-lr: 0.00005

-batch_size: 32

-CPU_NUM: 10

-epoch: 30

-log_per_step: 10

-save_per_step: 200

-output_path: "./output"

-

-# data config ------

-train_data: "./example_data/graph_data.txt"

-graph_data: "./example_data/train_data.txt"

-graph_work_path: "./graph_workdir"

-input_type: "text"

-encoding: "utf8"

-

-# model config ------

-samples: [10]

-model_type: "ErnieSageV2"

-max_seqlen: 40

-num_layers: 1

-hidden_size: 128

-final_fc: true

-final_l2_norm: true

-loss_type: "hinge"

-margin: 0.1

-neg_type: "batch_neg"

-

-# infer config ------

-infer_model: "./output/last"

-infer_batch_size: 128

diff --git a/examples/text_graph/erniesage/data/dataset.py b/examples/text_graph/erniesage/data/dataset.py

deleted file mode 100644

index 2a3733851e63..000000000000

--- a/examples/text_graph/erniesage/data/dataset.py

+++ /dev/null

@@ -1,115 +0,0 @@

-# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import os

-

-import numpy as np

-import paddle

-import pgl

-from paddle.io import Dataset

-from pgl.sampling import graphsage_sample

-

-__all__ = [

- "TrainData",

- "PredictData",

- "batch_fn",

-]

-

-

-class TrainData(Dataset):

- def __init__(self, graph_work_path):

- trainer_id = paddle.distributed.get_rank()

- trainer_count = paddle.distributed.get_world_size()

- print("trainer_id: %s, trainer_count: %s." % (trainer_id, trainer_count))

-

- edges = np.load(os.path.join(graph_work_path, "train_data.npy"), allow_pickle=True)

- # edges is bidirectional.

- train_src = edges[trainer_id::trainer_count, 0]

- train_dst = edges[trainer_id::trainer_count, 1]

- returns = {"train_data": [train_src, train_dst]}

-

- if os.path.exists(os.path.join(graph_work_path, "neg_samples.npy")):

- neg_samples = np.load(os.path.join(graph_work_path, "neg_samples.npy"), allow_pickle=True)

- if neg_samples.size != 0:

- train_negs = neg_samples[trainer_id::trainer_count]

- returns["train_data"].append(train_negs)

- print("Load train_data done.")

- self.data = returns

-

- def __getitem__(self, index):

- return [data[index] for data in self.data["train_data"]]

-

- def __len__(self):

- return len(self.data["train_data"][0])

-

-

-class PredictData(Dataset):

- def __init__(self, num_nodes):

- trainer_id = paddle.distributed.get_rank()

- trainer_count = paddle.distributed.get_world_size()

- self.data = np.arange(trainer_id, num_nodes, trainer_count)

-

- def __getitem__(self, index):

- return [self.data[index], self.data[index]]

-

- def __len__(self):

- return len(self.data)

-

-

-def batch_fn(batch_ex, samples, base_graph, term_ids):

- batch_src = []

- batch_dst = []

- batch_neg = []

- for batch in batch_ex:

- batch_src.append(batch[0])

- batch_dst.append(batch[1])

- if len(batch) == 3: # default neg samples

- batch_neg.append(batch[2])

-

- batch_src = np.array(batch_src, dtype="int64")

- batch_dst = np.array(batch_dst, dtype="int64")

- if len(batch_neg) > 0:

- batch_neg = np.unique(np.concatenate(batch_neg))

- else:

- batch_neg = batch_dst

-

- nodes = np.unique(np.concatenate([batch_src, batch_dst, batch_neg], 0))

- subgraphs = graphsage_sample(base_graph, nodes, samples)

-

- subgraph, sample_index, node_index = subgraphs[0]

- from_reindex = {int(x): i for i, x in enumerate(sample_index)}

-

- term_ids = term_ids[sample_index].astype(np.int64)

-

- sub_src_idx = pgl.graph_kernel.map_nodes(batch_src, from_reindex)

- sub_dst_idx = pgl.graph_kernel.map_nodes(batch_dst, from_reindex)

- sub_neg_idx = pgl.graph_kernel.map_nodes(batch_neg, from_reindex)

-

- user_index = np.array(sub_src_idx, dtype="int64")

- pos_item_index = np.array(sub_dst_idx, dtype="int64")

- neg_item_index = np.array(sub_neg_idx, dtype="int64")

-

- user_real_index = np.array(batch_src, dtype="int64")

- pos_item_real_index = np.array(batch_dst, dtype="int64")

-

- return (

- np.array([subgraph.num_nodes], dtype="int32"),

- subgraph.edges.astype("int32"),

- term_ids,

- user_index,

- pos_item_index,

- neg_item_index,

- user_real_index,

- pos_item_real_index,

- )

diff --git a/examples/text_graph/erniesage/data/graph_reader.py b/examples/text_graph/erniesage/data/graph_reader.py

deleted file mode 100755

index ca82d5c78f66..000000000000

--- a/examples/text_graph/erniesage/data/graph_reader.py

+++ /dev/null

@@ -1,59 +0,0 @@

-# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-import pgl

-from paddle.io import DataLoader

-

-__all__ = ["GraphDataLoader"]

-

-

-class GraphDataLoader(object):

- def __init__(self, dataset, batch_size=1, shuffle=True, num_workers=1, collate_fn=None, **kwargs):

- self.loader = DataLoader(

- dataset=dataset,

- batch_size=batch_size,

- shuffle=shuffle,

- num_workers=num_workers,

- collate_fn=collate_fn,

- **kwargs,

- )

-

- def __iter__(self):

- func = self.__callback__()

- for data in self.loader():

- yield func(data)

-

- def __call__(self):

- return self.__iter__()

-

- def __callback__(self):

- """callback function, for recontruct a dict or graph."""

-

- def construct(tensors):

- """tensor list to ([graph_tensor, graph_tensor, ...],

- other tensor)

- """

- graph_num = 1

- start_len = 0

- data = []

- graph_list = []

- for graph in range(graph_num):

- graph_list.append(pgl.Graph(num_nodes=tensors[start_len], edges=tensors[start_len + 1]))

- start_len += 2

-

- for i in range(start_len, len(tensors)):

- data.append(tensors[i])

- return graph_list, data

-

- return construct

diff --git a/examples/text_graph/erniesage/example_data/graph_data.txt b/examples/text_graph/erniesage/example_data/graph_data.txt

deleted file mode 100644

index e9aead6c89fa..000000000000

--- a/examples/text_graph/erniesage/example_data/graph_data.txt

+++ /dev/null

@@ -1,1000 +0,0 @@

-黑缘粗角肖叶甲触角有多大? 体长卵形,棕红色;鞘翅棕黄或淡棕色,外缘和中缝黑色或黑褐色;触角基部3、4节棕黄,余节棕色。

-黑缘粗角肖叶甲触角有多大? 头部刻点粗大,分布不均匀,头顶刻点十分稀疏;触角基部的内侧有一个三角形光瘤,唇基前缘呈半圆形凹切。

-黑缘粗角肖叶甲触角有多大? 触角近于体长之半,第1节粗大,棒状,第2节短,椭圆形,3、4两节细长,稍短于第5节,第5节基细端粗,末端6节明显粗大。

-黑缘粗角肖叶甲触角有多大? 前胸背板横宽,宽约为长的两倍,侧缘敞出较宽,圆形,敞边与盘区之间有一条细纵沟;盘区刻点相当密,前半部刻点较大于后半部。

-黑缘粗角肖叶甲触角有多大? 小盾片舌形,光亮,末端圆钝。

-黑缘粗角肖叶甲触角有多大? 鞘翅刻点粗大,不规则排列,肩部之后的刻点更为粗大,具皱褶,近中缝的刻点较小,略呈纵行排列。

-黑缘粗角肖叶甲触角有多大? 前胸前侧片前缘直;前胸后侧片具粗大刻点。

-黑缘粗角肖叶甲触角有多大? 足粗壮;胫节具纵脊,外端角向外延伸,呈弯角状;爪具附齿。

-暮光闪闪的姐姐是谁? 暮光闪闪是一匹雌性独角兽,后来在神秘魔法的影响下变成了空角兽(公主),她是《我的小马驹:友情是魔法》(英文名:My Little Pony:Friendship is Magic)中的主角之一。

-暮光闪闪的姐姐是谁? 她是银甲闪闪(Shining Armor)的妹妹,同时也是韵律公主(Princess Cadance)的小姑子。

-暮光闪闪的姐姐是谁? 在该系列中,她与最好的朋友与助手斯派克(Spike)一起生活在小马镇(Ponyville)的金橡图书馆(Golden Oak Library),研究友谊的魔法。

-暮光闪闪的姐姐是谁? 在暮光闪闪成为天角兽之前(即S3E13前),常常给塞拉丝蒂娅公主(Princess Celestia)关于友谊的报告。[1]

-暮光闪闪的姐姐是谁? 《我的小马驹:友谊是魔法》(英文名称:My Little Pony:Friendship is Magic)(简称MLP)

-暮光闪闪的姐姐是谁? 动画讲述了一只名叫做暮光闪闪(Twilight Sparkle)的独角兽(在SE3E13

-暮光闪闪的姐姐是谁? My Little Pony:Friendship is Magic[2]

-暮光闪闪的姐姐是谁? 后成为了天角兽),执行她的导师塞拉斯蒂娅公主(PrincessCelestia)的任务,在小马镇(Ponyville)学习关于友谊的知识。

-暮光闪闪的姐姐是谁? 她与另外五只小马,苹果杰克(Applejack)、瑞瑞(Rarity)、云宝黛西(Rainbow Dash)、小蝶(Fluttershy)与萍琪派(Pinkie Pie),成为了最要好的朋友。

-暮光闪闪的姐姐是谁? 每匹小马都分别代表了协律精华的6个元素:诚实,慷慨,忠诚,善良,欢笑,魔法,各自扮演着属于自己的重要角色。

-暮光闪闪的姐姐是谁? 此后,暮光闪闪(Twilight Sparkle)便与她认识的新朋友们开始了有趣的日常生活。

-暮光闪闪的姐姐是谁? 在动画中,随时可见她们在小马镇(Ponyville)的种种冒险、奇遇、日常等等。

-暮光闪闪的姐姐是谁? 同时,也在她们之间的互动和冲突中,寻找着最适合最合理的完美解决方案。

-暮光闪闪的姐姐是谁? “尽管小马国并不太平,六位主角之间也常常有这样那样的问题,但是他们之间的真情对待,使得这个童话世界已经成为不少人心中理想的世外桃源。”

-暮光闪闪的姐姐是谁? 暮光闪闪在剧情刚开始的时候生活在中心城(Canterlot),后来在夏日

-暮光闪闪的姐姐是谁? 暮光闪闪与斯派克(Spike)

-暮光闪闪的姐姐是谁? 庆典的时候被塞拉丝蒂娅公主派遣到小马镇执行检查夏日庆典的准备工作的任务。

-暮光闪闪的姐姐是谁? 在小马镇交到了朋友(即其余5个主角),并和她们一起使用协律精华(Elements of harmony)击败了梦魇之月。

-暮光闪闪的姐姐是谁? 并在塞拉丝蒂亚公主的许可下,留在小马镇继续研究友谊的魔法。

-暮光闪闪的姐姐是谁? 暮光闪闪的知识基本来自于书本,并且她相当不相信书本以外的“迷信”,因为这样她在S1E15里吃足了苦头。

-暮光闪闪的姐姐是谁? 在这之后,她也开始慢慢学会相信一些书本以外的东西。

-暮光闪闪的姐姐是谁? 暮光闪闪热爱学习,并且学习成绩相当好(从她可以立刻算出

-暮光闪闪的姐姐是谁? 的结果可以看

-暮光闪闪的姐姐是谁? 暮光闪闪的原型

-暮光闪闪的姐姐是谁? 出)。

-暮光闪闪的姐姐是谁? 相当敬爱自己的老师塞拉丝蒂亚公主甚至到了精神失常的地步。

-暮光闪闪的姐姐是谁? 在第二季中,曾因为无法交出关于友谊的报告而做出了疯狂的行为,后来被塞拉丝蒂亚公主制止,在这之后,暮光闪闪得到了塞拉丝蒂亚公主“不用定期交友谊报告”的许可。

-暮光闪闪的姐姐是谁? 于是暮光闪闪在后面的剧情中的主角地位越来越得不到明显的体现。

-暮光闪闪的姐姐是谁? 在SE3E13中,因为破解了白胡子星璇留下的神秘魔法而被加冕成为了天角兽(公主),被尊称为“闪闪公主”。

-暮光闪闪的姐姐是谁? 当小星座熊在小马镇引起恐慌的时候,暮光闪闪运用了自身强大的魔法将水库举起后装满牛奶,用牛奶将小星座熊安抚后,连着巨型奶瓶和小星座熊一起送回了小星座熊居住的山洞。

-我想知道红谷十二庭有哪些金融机构? 红谷十二庭是由汪氏集团旗下子公司江西尤金房地产开发有限公司携手城发投资共同开发的精品社区,项目占地面积约380亩,总建筑面积约41万平方米。

-我想知道红谷十二庭有哪些金融机构? 项目以建设人文型、生态型居住环境为规划目标;创造一个布局合理、功能齐全、交通便捷、绿意盎然、生活方便,有文化内涵的居住区。

-我想知道红谷十二庭有哪些金融机构? 金融机构:工商银行、建设银行、农业银行、中国银行红谷滩支行、商业银行红谷滩支行等

-我想知道红谷十二庭有哪些金融机构? 周边公园:沿乌砂河50米宽绿化带、乌砂河水岸公园、秋水广场、赣江市民公园

-我想知道红谷十二庭有哪些金融机构? 周边医院:新建县人民医院、开心人药店、中寰医院

-我想知道红谷十二庭有哪些金融机构? 周边学校:育新小学红谷滩校区、南师附小红谷滩校区、实验小学红谷滩校区中学:南昌二中红谷滩校区、南昌五中、新建二中、竞秀贵族学校

-我想知道红谷十二庭有哪些金融机构? 周边公共交通:112、204、211、219、222、227、238、501等20多辆公交车在本项目社区门前停靠

-我想知道红谷十二庭有哪些金融机构? 红谷十二庭处在南昌一江两城中的西城中心,位属红谷滩CBD文化公园中心——马兰圩中心组团,红谷滩中心区、红角洲、新建县三区交汇处,南临南期友好路、东接红谷滩中心区、西靠乌砂河水岸公园(50米宽,1000米长)。

-我想知道红谷十二庭有哪些金融机构? 交通便捷,景观资源丰富,生活配套设施齐全,出则繁华,入则幽静,是现代人居的理想地段。

-我想知道红谷十二庭有哪些金融机构? 红谷十二庭户型图

-苏琳最开始进入智通实业是担任什么职位? 现任广东智通人才连锁股份有限公司总裁,清华大学高级工商管理硕士。

-苏琳最开始进入智通实业是担任什么职位? 1994年,加入智通实业,从总经理秘书做起。

-苏琳最开始进入智通实业是担任什么职位? 1995年,智通实业决定进入人才服务行业,被启用去负责新公司的筹建及运营工作,在苏琳的努力下,智通人才智力开发有限公司成立。

-苏琳最开始进入智通实业是担任什么职位? 2003年,面对同城对手的激烈竞争,苏琳冷静对待,领导智通先后接管、并购了同城的腾龙、安达盛人才市场,,“品牌运作,连锁经营,差异制胜”成为苏琳屡屡制胜的法宝。

-苏琳最开始进入智通实业是担任什么职位? 2006年,苏琳先是将智通人才升级为“东莞市智通人才连锁有限公司”,一举成为广东省人才市场目前惟一的连锁机构,随后在东莞同时开设长安、松山湖、清溪等镇区分部,至此智通在东莞共有6个分部。

-苏琳最开始进入智通实业是担任什么职位? 一番大刀阔斧完成东莞布局后,苏琳确定下一个更为高远的目标——进军珠三角,向全国发展连锁机构。

-苏琳最开始进入智通实业是担任什么职位? 到2011年末,苏琳领导的智通人才已在珠三角的东莞、佛山、江门、中山等地,长三角的南京、宁波、合肥等地,中西部的南昌、长沙、武汉、重庆、西安等地设立了20多家连锁经营网点。

-苏琳最开始进入智通实业是担任什么职位? 除了财务副总裁之外,苏琳是智通人才核心管理高层当中唯一的女性,不管是要约采访的记者还是刚刚加入智通的员工,见到苏琳的第一面,都会有一种惊艳的感觉,“一位女企业家居然非常美丽和时尚?!”

-苏琳最开始进入智通实业是担任什么职位? 智通管理高层的另外6位男性成员,有一次同时接受一家知名媒体采访时,共同表达了对自己老板的“爱慕”之情,苏琳听后莞尔一笑,指着在座的这几位高层说道“其实,我更爱他们!”

-苏琳最开始进入智通实业是担任什么职位? 这种具有独特领导魅力的表述让这位记者唏嘘不已,同时由这样的一个细节让他感受到了智通管理团队的协作力量。

-谁知道黄沙中心小学的邮政编码是多少? 学校于1954年始建于棕树湾村,当时借用一间民房做教室,取名为“黄沙小学”,只有教师1人,学生8人。

-谁知道黄沙中心小学的邮政编码是多少? 1958年在大跃进精神的指导下,实行大集体,全乡集中办学,发展到12个班,300多学生,20名教职工。

-谁知道黄沙中心小学的邮政编码是多少? 1959年解散。

-谁知道黄沙中心小学的邮政编码是多少? 1959年下半年,在上级的扶持下,建了6间木房,搬到1960年学校所在地,有6名教师,3个班,60名学生。

-谁知道黄沙中心小学的邮政编码是多少? 1968年,开始招收一个初中班,“黄沙小学”改名为 “附小”。

-谁知道黄沙中心小学的邮政编码是多少? 当时已发展到5个班,8名教师,110多名学生。

-谁知道黄沙中心小学的邮政编码是多少? 增建土木结构教室两间。

-谁知道黄沙中心小学的邮政编码是多少? 1986年,初中、小学分开办学。

-谁知道黄沙中心小学的邮政编码是多少? 增建部分教师宿舍和教室,办学条件稍有改善,学校初具规模。

-谁知道黄沙中心小学的邮政编码是多少? 1996年,我校在市、县领导及希望工程主管部门的关怀下,决定改为“黄沙希望小学”并拨款32万元,新建一栋4层,12间教室的教学楼,教学条件大有改善。

-谁知道黄沙中心小学的邮政编码是多少? 当时发展到10个班,学生300多人,教职工19人,小学高级教师3人,一级教师7人,二级教师9人。

-谁知道黄沙中心小学的邮政编码是多少? 2003年下半年由于农村教育体制改革,撤销教育组,更名为“黄沙中心小学”。

-谁知道黄沙中心小学的邮政编码是多少? 学校现有在校生177人(含学前42人),设有学前至六年级共7个教学班。

-谁知道黄沙中心小学的邮政编码是多少? 有教师19人,其中大专以上学历11人,中师6人;小学高级教师14人,一级教师5人。

-谁知道黄沙中心小学的邮政编码是多少? 学校校园占地面积2050平方米,生均达15.29平方米,校舍建筑面积1645平方米,生均12.27平方米;设有教师办公室、自然实验、电教室(合二为一)、微机室、图书阅览室(合二为一)、体育室、广播室、少先队活动室。

-谁知道黄沙中心小学的邮政编码是多少? 广西壮族自治区桂林市临桂县黄沙瑶族乡黄沙街 邮编:541113[1]

-伊藤实华的职业是什么? 伊藤实华(1984年3月25日-)是日本的女性声优。

-伊藤实华的职业是什么? THREE TREE所属,东京都出身,身长149cm,体重39kg,血型AB型。

-伊藤实华的职业是什么? ポルノグラフィティのLION(森男)

-伊藤实华的职业是什么? 2000年

-伊藤实华的职业是什么? 犬夜叉(枫(少女时代))

-伊藤实华的职业是什么? 幻影死神(西亚梨沙)

-伊藤实华的职业是什么? 2001年

-伊藤实华的职业是什么? NOIR(ロザリー)

-伊藤实华的职业是什么? 2002年

-伊藤实华的职业是什么? 水瓶战记(柠檬)

-伊藤实华的职业是什么? 返乡战士(エイファ)

-伊藤实华的职业是什么? 2003年

-伊藤实华的职业是什么? 奇诺之旅(女子A(悲しい国))

-伊藤实华的职业是什么? 2004年

-伊藤实华的职业是什么? 爱你宝贝(坂下ミキ)

-伊藤实华的职业是什么? Get Ride! アムドライバー(イヴァン・ニルギース幼少期)

-伊藤实华的职业是什么? スクールランブル(花井春树(幼少时代))

-伊藤实华的职业是什么? 2005年

-伊藤实华的职业是什么? 光速蒙面侠21(虎吉)

-伊藤实华的职业是什么? 搞笑漫画日和(男子トイレの精、パン美先生)

-伊藤实华的职业是什么? 银牙伝说WEED(テル)

-伊藤实华的职业是什么? 魔女的考验(真部カレン、守山太郎)

-伊藤实华的职业是什么? BUZZER BEATER(レニー)

-伊藤实华的职业是什么? 虫师(“眼福眼祸”さき、“草を踏む音”沢(幼少时代))

-伊藤实华的职业是什么? 2006年

-伊藤实华的职业是什么? 魔女之刃(娜梅)

-伊藤实华的职业是什么? 反斗小王子(远藤レイラ)

-伊藤实华的职业是什么? 搞笑漫画日和2(パン美先生、フグ子、ダンサー、ヤマトの妹、女性)

-伊藤实华的职业是什么? 人造昆虫カブトボーグ V×V(ベネチアンの弟、东ルリ、园儿A)

-伊藤实华的职业是什么? 2007年

-爆胎监测与安全控制系统英文是什么? 爆胎监测与安全控制系统(Blow-out Monitoring and Brake System),是吉利全球首创,并拥有自主知识产权及专利的一项安全技术。

-爆胎监测与安全控制系统英文是什么? 这项技术主要是出于防止高速爆胎所导致的车辆失控而设计。

-爆胎监测与安全控制系统英文是什么? BMBS爆胎监测与安全控制系统技术于2004年1月28日正式获得中国发明专利授权。

-爆胎监测与安全控制系统英文是什么? 2008年第一代BMBS系统正式与世人见面,BMBS汇集国内外汽车力学、控制学、人体生理学、电子信息学等方面的专家和工程技术人员经过一百余辆试验车累计行程超过五百万公里的可靠性验证,以确保产品的可靠性。

-爆胎监测与安全控制系统英文是什么? BMBS技术方案的核心即是采用智能化自动控制系统,弥补驾驶员生理局限,在爆胎后反应时间为0.5秒,替代驾驶员实施行车制动,保障行车安全。

-爆胎监测与安全控制系统英文是什么? BMBS系统由控制系统和显示系统两大部分组成,控制系统由BMBS开关、BMBS主机、BMBS分机、BMBS真空助力器四部分组成;显示系统由GPS显示、仪表指示灯、语言提示、制动双闪灯组成。

-爆胎监测与安全控制系统英文是什么? 当轮胎气压高于或低于限值时,控制器声光提示胎压异常。

-爆胎监测与安全控制系统英文是什么? 轮胎温度过高时,控制器发出信号提示轮胎温度过高。

-爆胎监测与安全控制系统英文是什么? 发射器电量不足时,控制器显示低电压报警。

-爆胎监测与安全控制系统英文是什么? 发射器受到干扰长期不发射信号时,控制器显示无信号报警。

-爆胎监测与安全控制系统英文是什么? 当汽车电门钥匙接通时,BMBS首先进入自检程序,检测系统各部分功能是否正常,如不正常,BMBS报警灯常亮。

-走读干部现象在哪里比较多? 走读干部一般是指县乡两级干部家住县城以上的城市,本人在县城或者乡镇工作,要么晚出早归,要么周一去单位上班、周五回家过周末。

-走读干部现象在哪里比较多? 对于这种现象,社会上的议论多是批评性的,认为这些干部脱离群众、作风漂浮、官僚主义,造成行政成本增加和腐败。

-走读干部现象在哪里比较多? 截至2014年10月,共有6484名“走读干部”在专项整治中被查处。

-走读干部现象在哪里比较多? 这是中央首次大规模集中处理这一长期遭诟病的干部作风问题。

-走读干部现象在哪里比较多? 干部“走读”问题主要在乡镇地区比较突出,城市地区则较少。

-走读干部现象在哪里比较多? 从历史成因和各地反映的情况来看,产生“走读”现象的主要原因大致有四种:

-走读干部现象在哪里比较多? 现今绝大多数乡村都有通往乡镇和县城的石子公路甚至柏油公路,这无疑为农村干部的出行创造了便利条件,为“干部像候鸟,频往家里跑”创造了客观条件。

-走读干部现象在哪里比较多? 选调生、公务员队伍大多是学历较高的大学毕业生,曾在高校所在地的城市生活,不少人向往城市生活,他们不安心长期扎根基层,而是将基层当作跳板,因此他们往往成为“走读”的主力军。

-走读干部现象在哪里比较多? 公仆意识、服务意识淡化,是“走读”现象滋生的主观原因。

-走读干部现象在哪里比较多? 有些党员干部感到自己长期在基层工作,该为自己和家庭想想了。

-走读干部现象在哪里比较多? 于是,不深入群众认真调查研究、认真听取群众意见、认真解决群众的实际困难,也就不难理解了。

-走读干部现象在哪里比较多? 县级党政组织对乡镇领导干部管理的弱化和为基层服务不到位,导致“走读”问题得不到应有的制度约束,是“走读”问题滋长的组织原因。[2]

-走读干部现象在哪里比较多? 近些年来,我国一些地方的“干部走读”现象较为普遍,社会上对此议走读干部论颇多。

-走读干部现象在哪里比较多? 所谓“干部走读”,一般是指县乡两级干部家住县城以上的城市,本人在县城或者乡镇工作,要么早出晚归,要么周一去单位上班、周五回家过周末。

-走读干部现象在哪里比较多? 对于这种现象,社会上的议论多是批评性的,认为这些干部脱离群众、作风漂浮、官僚主义,造成行政成本增加和腐败。

-走读干部现象在哪里比较多? 干部走读之所以成为“千夫所指”,是因为这种行为增加了行政成本。

-走读干部现象在哪里比较多? 从根子上说,干部走读是城乡发展不平衡的产物,“人往高处走,水往低处流”,有了更加舒适的生活环境,不管是为了自己生活条件改善也好,还是因为子女教育也好,农村人口向城镇转移,这是必然结果。

-走读干部现象在哪里比较多? “干部走读”的另一个重要原因,是干部人事制度改革。

-走读干部现象在哪里比较多? 目前公务员队伍“凡进必考”,考上公务员的大多是学历较高的大学毕业生,这些大学毕业生来自各个全国各地,一部分在本地结婚生子,沉淀下来;一部分把公务员作为跳板,到基层后或考研,或再参加省考、国考,或想办法调回原籍。

-走读干部现象在哪里比较多? 再加上一些下派干部、异地交流任职干部,构成了看似庞大的“走读”队伍。

-走读干部现象在哪里比较多? 那么,“干部走读”有哪些弊端呢?

-走读干部现象在哪里比较多? 一是这些干部人在基层,心在城市,缺乏长期作战的思想,工作不安心。

-走读干部现象在哪里比较多? 周一来上班,周五回家转,对基层工作缺乏热情和感情;二是长期在省市直机关工作,对基层工作不熟悉不了解,工作不热心;三是长期走读,基层干群有工作难汇报,有困难难解决,群众不开心;四是干部来回走读,公车私驾,私费公报,把大量的经济负担转嫁给基层;五是对这些走读干部,基层管不了,上级监督难,节假日期间到哪里去、做什么事,基本处于失控和真空状态,各级组织和基层干群不放心。

-走读干部现象在哪里比较多? 特别需要引起警觉的是,由于少数走读干部有临时思想,满足于“当维持会长”,得过且过混日子,热衷于做一些急功近利、砸锅求铁的短期行为和政绩工程,不愿做打基础、管长远的实事好事,甚至怠政、疏政和懒于理政,影响了党和政府各项方针政策措施的落实,导致基层无政府主义、自由主义抬头,削弱了党和政府的领导,等到矛盾激化甚至不可收拾的时候,处理已是来之不及。

-走读干部现象在哪里比较多? 权利要与义务相等,不能只有义务而没有权利,或是只有权利没有义务。

-走读干部现象在哪里比较多? 如何真正彻底解决乡镇干部“走读”的现象呢?

-走读干部现象在哪里比较多? 那就必须让乡镇基层干部义务与权利相等。

-走读干部现象在哪里比较多? 如果不能解决基层干部待遇等问题,即使干部住村,工作上也不会有什么进展的。

-走读干部现象在哪里比较多? 所以,在政治上关心,在生活上照顾,在待遇上提高。

-走读干部现象在哪里比较多? 如,提高基层干部的工资待遇,增加通讯、交通补助;帮助解决子女入学及老人赡养问题;提拔干部优先考虑基层干部;干部退休时的待遇至少不低于机关干部等等。

-化州市良光镇东岸小学学风是什么? 学校全体教职工爱岗敬业,团结拼搏,勇于开拓,大胆创新,进行教育教学改革,努力开辟第二课堂的教学路子,并开通了网络校校通的交流合作方式。

-化州市良光镇东岸小学学风是什么? 现学校教师正在为创建安全文明校园而努力。

-化州市良光镇东岸小学学风是什么? 东岸小学位置偏僻,地处贫穷落后,是良光镇最偏远的学校,学校,下辖分教点——东心埇小学,[1]?。

-化州市良光镇东岸小学学风是什么? 学校2011年有教师22人,学生231人。

-化州市良光镇东岸小学学风是什么? 小学高级教师8人,小学一级教师10人,未定级教师4人,大专学历的教师6人,其余的都具有中师学历。

-化州市良光镇东岸小学学风是什么? 全校共设12个班,学校课程按标准开设。

-化州市良光镇东岸小学学风是什么? 东岸小学原来是一所破旧不堪,教学质量非常差的薄弱学校。

-化州市良光镇东岸小学学风是什么? 近几年来,在各级政府、教育部门及社会各界热心人士鼎力支持下,学校领导大胆改革创新,致力提高教学质量和教师水平,并加大经费投入,大大改善了办学条件,使学校由差变好,实现了大跨越。

-化州市良光镇东岸小学学风是什么? 学校建设性方面。

-化州市良光镇东岸小学学风是什么? 东岸小学属于革命老区学校,始建于1980年,从东心埇村祠堂搬到这个校址,1990年建造一幢建筑面积为800平方米的南面教学楼, 1998年老促会支持从北面建造一幢1800平方米的教学大楼。

-化州市良光镇东岸小学学风是什么? 学校在管理方面表现方面颇具特色,实现了各项制度的日常化和规范化。

-化州市良光镇东岸小学学风是什么? 学校领导有较强的事业心和责任感,讲求民主与合作,勤政廉政,依法治校,树立了服务意识。

-化州市良光镇东岸小学学风是什么? 学校一贯实施“德育为先,以人为本”的教育方针,制定了“团结,律已,拼搏,创新”的校训。

-化州市良光镇东岸小学学风是什么? 教育风为“爱岗敬业,乐于奉献”,学风为“乐学,勤学,巧学,会学”。

-化州市良光镇东岸小学学风是什么? 校内营造了尊师重教的氛围,形成了良好的校风和学风。

-化州市良光镇东岸小学学风是什么? 教师们爱岗敬业,师德高尚,治学严谨,教研教改气氛浓厚,获得喜人的教研成果。

-化州市良光镇东岸小学学风是什么? 近几年来,教师撰写的教育教学论文共10篇获得县市级以上奖励,获了镇级以上奖励的有100人次。

-化州市良光镇东岸小学学风是什么? 学校德育工作成绩显著,多年被评为“安全事故为零”的学校,良光镇先进学校。

-化州市良光镇东岸小学学风是什么? 特别是教学质量大大提高了。

-化州市良光镇东岸小学学风是什么? 这些成绩得到了上级及群众的充分肯定。

-化州市良光镇东岸小学学风是什么? 1.学校环境欠美观有序,学校大门口及校道有待改造。

-化州市良光镇东岸小学学风是什么? 2.学校管理制度有待改进,部分教师业务水平有待提高。

-化州市良光镇东岸小学学风是什么? 3.教师宿舍、教室及学生宿舍欠缺。

-化州市良光镇东岸小学学风是什么? 4.运动场不够规范,各类体育器材及设施需要增加。

-化州市良光镇东岸小学学风是什么? 5.学生活动空间少,见识面窄,视野不够开阔。

-化州市良光镇东岸小学学风是什么? 1.努力营造和谐的教育教学新气氛。

-化州市良光镇东岸小学学风是什么? 建立科学的管理制度,坚持“与时俱进,以人为本”,真正实现领导对教师,教师对学生之间进行“德治与情治”;学校的人文环境做到“文明,和谐,清新”;德育环境做到“自尊,律已,律人”;心理环境做到“安全,谦虚,奋发”;交际环境做到“团结合作,真诚助人”;景物环境做到“宜人,有序。”

-化州市良光镇东岸小学学风是什么? 营造学校与育人的新特色。

-我很好奇发射管的输出功率怎么样? 产生或放大高频功率的静电控制电子管,有时也称振荡管。

-我很好奇发射管的输出功率怎么样? 用于音频或开关电路中的发射管称调制管。

-我很好奇发射管的输出功率怎么样? 发射管是无线电广播、通信、电视发射设备和工业高频设备中的主要电子器件。

-我很好奇发射管的输出功率怎么样? 输出功率和工作频率是发射管的基本技术指标。

-我很好奇发射管的输出功率怎么样? 广播、通信和工业设备的发射管,工作频率一般在30兆赫以下,输出功率在1919年为2千瓦以下,1930年达300千瓦,70年代初已超过1000千瓦,效率高达80%以上。

-我很好奇发射管的输出功率怎么样? 发射管工作频率提高时,输出功率和效率都会降低,因此1936年首次实用的脉冲雷达工作频率仅28兆赫,80年代则已达 400兆赫以上。

-我很好奇发射管的输出功率怎么样? 40年代电视发射管的工作频率为数十兆赫,而80年代初,优良的电视发射管可在1000兆赫下工作,输出功率达20千瓦,效率为40%。

-我很好奇发射管的输出功率怎么样? 平面电极结构的小功率发射三极管可在更高的频率下工作。

-我很好奇发射管的输出功率怎么样? 发射管多采用同心圆筒电极结构。

-我很好奇发射管的输出功率怎么样? 阴极在最内层,向外依次为各个栅极和阳极。

-我很好奇发射管的输出功率怎么样? 图中,自左至右为阴极、第一栅、第二栅、栅极阴极组装件和装入阳极后的整个管子。

-我很好奇发射管的输出功率怎么样? 发射管

-我很好奇发射管的输出功率怎么样? 中小功率发射管多采用间热式氧化物阴极。

-我很好奇发射管的输出功率怎么样? 大功率发射管一般采用碳化钍钨丝阴极,有螺旋、直条或网笼等结构形式。

-我很好奇发射管的输出功率怎么样? 图为网笼式阴极。

-我很好奇发射管的输出功率怎么样? 栅极多用钼丝或钨丝绕制,或用钼片经电加工等方法制造。

-我很好奇发射管的输出功率怎么样? 栅极表面经镀金(或铂)或涂敷锆粉等处理,以降低栅极电子发射,使发射管稳定工作。

-我很好奇发射管的输出功率怎么样? 用气相沉积方法制造的石墨栅极,具有良好的性能。

-我很好奇发射管的输出功率怎么样? 发射管阳极直流输入功率转化为高频输出功率的部分约为75%,其余25%成为阳极热损耗,因此对发射管的阳极必须进行冷却。

-我很好奇发射管的输出功率怎么样? 中小功率发射管的阳极采取自然冷却方式,用镍、钼或石墨等材料制造,装在管壳之内,工作温度可达 600℃。

-我很好奇发射管的输出功率怎么样? 大功率发射管的阳极都用铜制成,并作为真空密封管壳的一部分,采用各种强制冷却方式。

-我很好奇发射管的输出功率怎么样? 各种冷却方式下每平方厘米阳极内表面的散热能力为:水冷100瓦;风冷30瓦;蒸发冷却250瓦;超蒸发冷却1000瓦以上,80年代已制成阳极损耗功率为1250千瓦的超蒸发冷却发射管。

-我很好奇发射管的输出功率怎么样? 发射管也常以冷却方式命名,如风冷发射管、水冷发射管和蒸发冷却发射管。

-我很好奇发射管的输出功率怎么样? 发射管管壳用玻璃或陶瓷制造。

-我很好奇发射管的输出功率怎么样? 小功率发射管内使用含钡的吸气剂;大功率发射管则采用锆、钛、钽等吸气材料,管内压强约为10帕量级。

-我很好奇发射管的输出功率怎么样? 发射管寿命取决于阴极发射电子的能力。

-我很好奇发射管的输出功率怎么样? 大功率发射管寿命最高记录可达8万小时。

-我很好奇发射管的输出功率怎么样? 发射四极管的放大作用和输出输入电路间的隔离效果优于三极管,应用最广。

-我很好奇发射管的输出功率怎么样? 工业高频振荡器普遍采用三极管。

-我很好奇发射管的输出功率怎么样? 五极管多用在小功率范围中。

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 鲁能领秀城中央公园位于鲁能领秀城景观中轴之上,总占地161.55亩,总建筑面积约40万平米,容积率为2.70,由22栋小高层、高层组成;其绿地率高达35.2%,环境优美,产品更加注重品质化、人性化和自然生态化,是鲁能领秀城的生态人居典范。

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 中央公园[1] 学区准现房,坐享鲁能领秀城成熟配套,成熟生活一步到位。

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 经典板式小高层,103㎡2+1房仅22席,稀市推出,错过再无;92㎡经典两房、137㎡舒适三房压轴登场!

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 物业公司:

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 济南凯瑞物业公司;深圳长城物业公司;北京盛世物业有限公司

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 绿化率:

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 42%

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 容积率:

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 2.70

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 暖气:

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 集中供暖

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 楼座展示:中央公园由22栋小高层、高层组成,3、16、17号楼分别是11层小高层,18层和28层的高层。

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 4号楼是23层,2梯3户。

-鲁能领秀城中央公园有23层,2梯3户的是几号楼? 项目位置:

-鬼青蛙在哪里有收录详情? 鬼青蛙这张卡可以从手卡把这张卡以外的1只水属性怪兽丢弃,从手卡特殊召唤。

-鬼青蛙在哪里有收录详情? 这张卡召唤·反转召唤·特殊召唤成功时,可以从自己的卡组·场上选1只水族·水属性·2星以下的怪兽送去墓地。

-鬼青蛙在哪里有收录详情? 此外,1回合1次,可以通过让自己场上1只怪兽回到手卡,这个回合通常召唤外加上只有1次,自己可以把「鬼青蛙」以外的1只名字带有「青蛙」的怪兽召唤。[1]

-鬼青蛙在哪里有收录详情? 游戏王卡包收录详情

-鬼青蛙在哪里有收录详情? [09/09/18]

-西湖区有多大? 西湖区是江西省南昌市市辖区。

-西湖区有多大? 为南昌市中心城区之一,有着2200多年历史,是一个物华天宝、人杰地灵的古老城区。

-西湖区有多大? 2004年南昌市老城区区划调整后,西湖区东起京九铁路线与青山湖区毗邻,南以洪城路东段、抚河路南段、象湖以及南隔堤为界与青云谱区、南昌县接壤,西凭赣江中心线与红谷滩新区交界,北沿中山路、北京西路与东湖区相连,所辖面积34.5平方公里,常住人口43万,管辖1个镇、10个街道办事处,设12个行政村、100个社区。

-西湖区有多大? (图)西湖区[南昌市]

-西湖区有多大? 西湖原为汉代豫章群古太湖的一部分,唐贞元15年(公元799年)洪恩桥的架设将东太湖分隔成东西两部分,洪恩桥以西谓之西湖,西湖区由此而得名。

-西湖区有多大? 西湖区在1926年南昌设市后分别称第四、五部分,六、七部分。

-西湖区有多大? 1949年解放初期分别称第三、四区。

-西湖区有多大? 1955年分别称抚河区、西湖区。

-西湖区有多大? 1980年两区合并称西湖区。[1]

-西湖区有多大? 辖:西湖街道、丁公路街道、广外街道、系马桩街道、绳金塔街道、朝阳洲街道、禾草街街道、十字街街道、瓦子角街道、三眼井街道、上海路街道、筷子巷街道、南站街道。[1]

-西湖区有多大? 2002年9月,由原筷子巷街道和原禾草街街道合并设立南浦街道,原广外街道与瓦子角街道的一部分合并设立广润门街道。

-西湖区有多大? 2002年12月1日设立桃源街道。

-西湖区有多大? 2004年区划调整前的西湖区区域:东与青山湖区湖坊乡插花接壤;西临赣江与红谷滩新区隔江相望;南以建设路为界,和青云谱区毗邻;北连中山路,北京西路,与东湖区交界。[1]

-西湖区有多大? 2002年9月,由原筷子巷街道和原禾草街街道合并设立南浦街道,原广外街道与瓦子角街道的一部分合并设立广润门街道。

-西湖区有多大? 2002年12月1日设立桃源街道。

-西湖区有多大? 2004年区划调整前的西湖区区域:东与青山湖区湖坊乡插花接壤;西临赣江与红谷滩新区隔江相望;南以建设路为界,和青云谱区毗邻;北连中山路,北京西路,与东湖区交界。

-西湖区有多大? 2004年9月7日,国务院批准(国函[2004]70号)调整南昌市市辖区部分行政区划:将西湖区朝阳洲街道的西船居委会划归东湖区管辖。

-西湖区有多大? 将青山湖区的桃花镇和湖坊镇的同盟村划归西湖区管辖。

-西湖区有多大? 将西湖区十字街街道的谷市街、洪城路、南关口、九四、新丰5个居委会,上海路街道的草珊瑚集团、南昌肠衣厂、电子计算机厂、江西涤纶厂、江地基础公司、曙光、商标彩印厂、南昌市染整厂、江南蓄电池厂、四机床厂、二进、国乐新村12个居委会,南站街道的解放西路东居委会划归青云谱区管辖。

-西湖区有多大? 将西湖区上海路街道的轻化所、洪钢、省人民检察院、电信城东分局、安康、省机械施工公司、省水利设计院、省安装公司、南方电动工具厂、江西橡胶厂、上海路北、南昌电池厂、东华计量所、南昌搪瓷厂、上海路新村、华安针织总厂、江西五金厂、三波电机厂、水文地质大队、二六○厂、省卫生学校、新世纪、上海路住宅区北、塔子桥北、南航、上海路住宅区南、沿河、南昌阀门厂28个居委会,丁公路街道的新魏路、半边街、师大南路、顺化门、岔道口东路、师大、广电厅、手表厂、鸿顺9个居委会,南站街道的工人新村北、工人新村南、商苑、洪都中大道、铁路第三、铁路第四、铁路第六7个居委会划归青山湖区管辖。

-西湖区有多大? 调整后,西湖区辖绳金塔、桃源、朝阳洲、广润门、南浦、西湖、系马桩、十字街、丁公路、南站10个街道和桃花镇,区人民政府驻孺子路。

-西湖区有多大? 调整前,西湖区面积31平方千米,人口52万。

-西湖区有多大? (图)西湖区[南昌市]

-西湖区有多大? 西湖区位于江西省省会南昌市的中心地带,具有广阔的发展空间和庞大的消费群体,商贸旅游、娱乐服务业等到各个行业都蕴藏着无限商机,投资前景十分广阔。

-西湖区有多大? 不仅水、电价格低廉,劳动力资源丰富,人均工资和房产价格都比沿海城市低,城区拥有良好的人居环境、低廉的投资成本,巨大的发展潜力。

-西湖区有多大? 105、316、320国道和京九铁路贯穿全境,把南北东西交通连成一线;民航可与上海、北京、广州、深圳、厦门、温州等到地通航,并开通了南昌-新加坡第一条国际航线;水运依托赣江可直达长江各港口;邮电通讯便捷,程控电话、数字微波、图文传真进入国际通讯网络;商检、海关、口岸等涉外机构齐全;水、电、气供应充足。

-西湖区有多大? (图)西湖区[南昌市]

-西湖区有多大? 西湖区,是江西省省会南昌市的中心城区,面积34.8平方公里,常住人口51.9万人,辖桃花镇、朝农管理处及10个街道,设13个行政村,116个社区居委会,20个家委会。[2]

-西湖区有多大? 2005年11月16日,南昌市《关于同意西湖区桃花镇、桃源、十字街街道办事处行政区划进行调整的批复》

-西湖区有多大? 1、同意将桃花镇的三道闸居委会划归桃源街道办事处管辖。

-青藏虎耳草花期什么时候? 青藏虎耳草多年生草本,高4-11.5厘米,丛生。

-青藏虎耳草花期什么时候? 花期7-8月。

-青藏虎耳草花期什么时候? 分布于甘肃(祁连山地)、青海(黄南、海南、海北)和西藏(加查)。

-青藏虎耳草花期什么时候? 生于海拔3 700-4 250米的林下、高山草甸和高山碎石隙。[1]

-青藏虎耳草花期什么时候? 多年生草本,高4-11.5厘米,丛生。

-青藏虎耳草花期什么时候? 茎不分枝,具褐色卷曲柔毛。

-青藏虎耳草花期什么时候? 基生叶具柄,叶片卵形、椭圆形至长圆形,长15-25毫米,宽4-8毫米,腹面无毛,背面和边缘具褐色卷曲柔毛,叶柄长1-3厘米,基部扩大,边缘具褐色卷曲柔毛;茎生叶卵形至椭圆形,长1.5-2厘米,向上渐变小。

-青藏虎耳草花期什么时候? 聚伞花序伞房状,具2-6花;花梗长5-19毫米,密被褐色卷曲柔毛;萼片在花期反曲,卵形至狭卵形,长2.5-4.2毫米,宽1.5-2毫米,先端钝,两面无毛,边缘具褐色卷曲柔毛,3-5脉于先端不汇合;花瓣腹面淡黄色且其中下部具红色斑点,背面紫红色,卵形、狭卵形至近长圆形,长2.5-5.2毫米,宽1.5-2.1毫米,先端钝,基部具长0.5-1毫米之爪,3-5(-7)脉,具2痂体;雄蕊长2-3.6毫米,花丝钻形;子房半下位,周围具环状花盘,花柱长1-1.5毫米。

-青藏虎耳草花期什么时候? 生于高山草甸、碎石间。

-青藏虎耳草花期什么时候? 分布青海、西藏、甘肃、四川等地。

-青藏虎耳草花期什么时候? [1]

-青藏虎耳草花期什么时候? 顶峰虎耳草Saxifraga cacuminum Harry Sm.

-青藏虎耳草花期什么时候? 对叶虎耳Saxifraga contraria Harry Sm.

-青藏虎耳草花期什么时候? 狭瓣虎耳草Saxifraga pseudohirculus Engl.

-青藏虎耳草花期什么时候? 唐古特虎耳草Saxifraga tangutica Engl.

-青藏虎耳草花期什么时候? 宽叶虎耳草(变种)Saxifraga tangutica Engl. var. platyphylla (Harry Sm.) J. T. Pan

-青藏虎耳草花期什么时候? 唐古特虎耳草(原变种)Saxifraga tangutica Engl. var. tangutica

-青藏虎耳草花期什么时候? 西藏虎耳草Saxifraga tibetica Losinsk.[1]

-青藏虎耳草花期什么时候? Saxifraga przewalskii Engl. in Bull. Acad. Sci. St. -Petersb. 29:115. 1883: Engl et Irmsch. in Bot. Jahrb. 48:580. f. 5E-H. 1912 et in Engl. Pflanzenr. 67(IV. 117): 107. f. 21 E-H. 1916; J. T. Pan in Acta Phytotax. Sin. 16(2): 16. 1978;中国高等植物图鉴补编2: 30. 1983; 西藏植物志 2: 483. 1985. [1]

-生产一支欧文冲锋枪需要多少钱? 欧文冲锋枪 Owen Gun 1945年,在新不列颠手持欧文冲锋枪的澳大利亚士兵 类型 冲锋枪 原产国 ?澳大利亚 服役记录 服役期间 1941年-1960年代 用户 参见使用国 参与战役 第二次世界大战 马来亚紧急状态 朝鲜战争 越南战争 1964年罗德西亚布什战争 生产历史 研发者 伊夫林·欧文(Evelyn Owen) 研发日期 1931年-1939年 生产商 约翰·莱萨特工厂 利特高轻武器工厂 单位制造费用 $ 30/枝 生产日期 1941年-1945年 制造数量 45,000-50,000 枝 衍生型 Mk 1/42 Mk 1/43 Mk 2/43 基本规格 总重 空枪: Mk 1/42:4.24 千克(9.35 磅) Mk 1/43:3.99 千克(8.8 磅) Mk 2/43:3.47 千克(7.65 磅) 全长 806 毫米(31.73 英吋) 枪管长度 247 毫米(9.72 英吋) 弹药 制式:9 × 19 毫米 原型:.38/200 原型:.45 ACP 口径 9 × 19 毫米:9 毫米(.357 英吋) .38/200:9.65 毫米(.38 英吋) .45 ACP:11.43 毫米(.45 英吋) 枪管 1 根,膛线7 条,右旋 枪机种类 直接反冲作用 开放式枪机 发射速率 理论射速: Mk 1/42:700 发/分钟 Mk 1/43:680 发/分钟 Mk 2/43:600 发/分钟 实际射速:120 发/分钟 枪口初速 380-420 米/秒(1,246.72-1,377.95 英尺/秒) 有效射程 瞄具装定射程:91.44 米(100 码) 最大有效射程:123 米(134.51 码) 最大射程 200 米(218.72 码) 供弹方式 32/33 发可拆卸式弹匣 瞄准具型式 机械瞄具:向右偏置的觇孔式照门和片状准星 欧文冲锋枪(英语:Owen Gun,正式名称:Owen Machine Carbine,以下简称为“欧文枪”)是一枝由伊夫林·(埃沃)·欧文(英语:Evelyn (Evo) Owen)于1939年研制、澳大利亚的首枝冲锋枪,制式型发射9 × 19 毫米鲁格手枪子弹。

-生产一支欧文冲锋枪需要多少钱? 欧文冲锋枪是澳大利亚唯一设计和主要服役的二战冲锋枪,并从1943年由澳大利亚陆军所使用,直到1960年代中期。

-生产一支欧文冲锋枪需要多少钱? 由新南威尔士州卧龙岗市出身的欧文枪发明者,伊夫林·欧文,在24岁时于1939年7月向悉尼维多利亚军营的澳大利亚陆军军械官员展示了他所设计的.22 LR口径“卡宾机枪”原型枪。

-生产一支欧文冲锋枪需要多少钱? 该枪却被澳大利亚陆军所拒绝,因为澳大利亚陆军在当时没有承认冲锋枪的价值。

-生产一支欧文冲锋枪需要多少钱? 随着战争的爆发,欧文加入了澳大利亚军队,并且成为一名列兵。

-生产一支欧文冲锋枪需要多少钱? 1940年9月,欧文的邻居,文森特·沃德尔(英语:Vincent Wardell),看到欧文家楼梯后面搁著一个麻布袋,里面放著一枝欧文枪的原型枪。

-生产一支欧文冲锋枪需要多少钱? 而文森特·沃德尔是坎布拉港的大型钢制品厂莱萨特公司的经理,他向欧文的父亲表明了他对其儿子的粗心大意感到痛心,但无论如何仍然解释了这款武器的历史。

-生产一支欧文冲锋枪需要多少钱? 沃德尔对欧文枪的简洁的设计留下了深刻的印象。

-生产一支欧文冲锋枪需要多少钱? 沃德尔安排欧文转调到陆军发明部(英语:Army Inventions Board),并重新开始在枪上的工作。

-生产一支欧文冲锋枪需要多少钱? 军队仍然持续地从负面角度查看该武器,但同时政府开始采取越来越有利的观点。

-生产一支欧文冲锋枪需要多少钱? 该欧文枪原型配备了装在顶部的弹鼓,后来让位给装在顶部的弹匣使用。

-生产一支欧文冲锋枪需要多少钱? 口径的选择亦花了一些时间去解决。

-生产一支欧文冲锋枪需要多少钱? 由于陆军有大批量的柯尔特.45 ACP子弹,它们决定欧文枪需要采用这种口径。

-生产一支欧文冲锋枪需要多少钱? 直到在1941年9月19日官方举办试验时,约翰·莱萨特工厂制成了9 毫米、.38/200和.45 ACP三种口径版本。

-生产一支欧文冲锋枪需要多少钱? 而从美、英进口的斯登冲锋枪和汤普森冲锋枪在试验中作为基准使用。

-生产一支欧文冲锋枪需要多少钱? 作为测试的一部分,所有的枪支都浸没在泥浆里,并以沙土覆盖,以模拟他们将会被使用时最恶劣的环境。

-生产一支欧文冲锋枪需要多少钱? 欧文枪是唯一在这测试中这样对待以后仍可正常操作的冲锋枪。

-生产一支欧文冲锋枪需要多少钱? 虽然测试表现出欧文枪具有比汤普森冲锋枪和司登冲锋枪更优秀的可靠性,陆军没有对其口径作出决定。

-生产一支欧文冲锋枪需要多少钱? 结果它在上级政府干预以后,陆军才下令9 毫米的衍生型为正式口径,并在1941年11月20日正式被澳大利亚陆军采用。

-生产一支欧文冲锋枪需要多少钱? 在欧文枪的寿命期间,其可靠性在澳大利亚部队中赢得了“军人的至爱”(英语:Digger's Darling)的绰号,亦有人传言它受到美军高度青睐。

-生产一支欧文冲锋枪需要多少钱? 欧文枪是在1942年开始正式由坎布拉港和纽卡斯尔的约翰·莱萨特工厂投入生产,在生产高峰期每个星期生产800 支。

-生产一支欧文冲锋枪需要多少钱? 1942年3月至1943年2月之间,莱萨特生产了28,000 枝欧文枪。

-生产一支欧文冲锋枪需要多少钱? 然而,最初的一批弹药类型竟然是错误的,以至10,000 枝欧文枪无法提供弹药。

-生产一支欧文冲锋枪需要多少钱? 政府再一次推翻军方的官僚主义作风??,并让弹药通过其最后的生产阶段,以及运送到当时在新几内亚与日军战斗的澳大利亚部队的手中。

-生产一支欧文冲锋枪需要多少钱? 在1941年至1945年间生产了约50,000 枝欧文枪。

-生产一支欧文冲锋枪需要多少钱? 在战争期间,欧文枪的平均生产成本为$ 30。[1]

-生产一支欧文冲锋枪需要多少钱? 虽然它是有点笨重,因为其可靠性,欧文枪在士兵当中变得非常流行。

-生产一支欧文冲锋枪需要多少钱? 它是如此成功,它也被新西兰、英国和美国订购。[2]

-生产一支欧文冲锋枪需要多少钱? 欧文枪后来也被澳大利亚部队在朝鲜战争和越南战争,[3]特别是步兵组的侦察兵。

-生产一支欧文冲锋枪需要多少钱? 这仍然是一枝制式的澳大利亚陆军武器,直到1960年代中期,它被F1冲锋枪所取代。

-第二届中国光伏摄影大赛因为什么政策而开始的? 光伏发电不仅是全球能源科技和产业发展的重要方向,也是我国具有国际竞争优势的战略性新兴产业,是我国保障能源安全、治理环境污染、应对气候变化的战略性选择。

-第二届中国光伏摄影大赛因为什么政策而开始的? 2013年7月以来,国家出台了《关于促进光伏产业健康发展的若干意见》等一系列政策,大力推进分布式光伏发电的应用,光伏发电有望走进千家万户,融入百姓民生。

-第二届中国光伏摄影大赛因为什么政策而开始的? 大赛主办方以此为契机,开启了“第二届中国光伏摄影大赛”的征程。

-悬赏任务有哪些类型? 悬赏任务,威客网站上一种任务模式,由雇主在威客网站发布任务,提供一定数额的赏金,以吸引威客们参与。

-悬赏任务有哪些类型? 悬赏任务数额一般在几十到几千不等,但也有几万甚至几十万的任务。

-悬赏任务有哪些类型? 主要以提交的作品的质量好坏作为中标标准,当然其中也带有雇主的主观喜好,中标人数较少,多为一个或几个,因此竞争激烈。

-悬赏任务有哪些类型? 大型悬赏任务赏金数额巨大,中标者也较多,但参与人也很多,对于身有一技之长的威客来讲,悬赏任务十分适合。

-悬赏任务有哪些类型? 悬赏任务的类型主要包括:设计类、文案类、取名类、网站类、编程类、推广类等等。

-悬赏任务有哪些类型? 每一类所适合的威客人群不同,报酬的多少也不同,比如设计类的报酬就比较高,一般都几百到几千,而推广类的计件任务报酬比较少,一般也就几块钱,但花费的时间很少,技术要求也很低。

-悬赏任务有哪些类型? 1.注册—登陆

-悬赏任务有哪些类型? 2.点击“我要发悬赏”—按照发布流程及提示提交任务要求。

-悬赏任务有哪些类型? 悬赏模式选择->网站托管赏金模式。

-悬赏任务有哪些类型? 威客网站客服稍后会跟发布者联系确认任务要求。

-悬赏任务有哪些类型? 3.没有问题之后就可以预付赏金进行任务发布。

-悬赏任务有哪些类型? 4.会员参与并提交稿件。

-悬赏任务有哪些类型? 5.发布者需要跟会员互动(每个提交稿件的会员都可以),解决问题,完善稿件,初步筛选稿件。

-悬赏任务有哪些类型? 6.任务发布期结束,进入选稿期(在筛选的稿件中选择最后满意的)

-悬赏任务有哪些类型? 7.发布者不满意现有稿件可选定一个会员修改至满意为止,或者加价延期重新开放任务进行征稿。

-悬赏任务有哪些类型? (重复第六步)没有问题后进入下一步。

-悬赏任务有哪些类型? 8:中标会员交源文件给发布者—发布者确认—任务结束—网站将赏金付给中标会员。

-悬赏任务有哪些类型? 1、任务发布者自由定价,自由确定悬赏时间,自由发布任务要求,自主确定中标会员和中标方案。

-悬赏任务有哪些类型? 2、任务发布者100%预付任务赏金,让竞标者坚信您的诚意和诚信。

-悬赏任务有哪些类型? 3、任务赏金分配原则:任务一经发布,网站收取20%发布费,中标会员获得赏金的80%。

-悬赏任务有哪些类型? 4、每个任务最终都会选定至少一个作品中标,至少一个竞标者获得赏金。

-悬赏任务有哪些类型? 5、任务发布者若未征集到满意作品,可以加价延期征集,也可让会员修改,会员也可以删除任务。

-悬赏任务有哪些类型? 6、任务发布者自己所在组织的任何人均不能以任何形式参加自己所发布的任务,一经发现则视为任务发布者委托威客网按照网站规则选稿。

-悬赏任务有哪些类型? 7、任务悬赏总金额低于100元(含100元)的任务,悬赏时间最多为7天。

-悬赏任务有哪些类型? 所有任务最长时间不超过30天(特殊任务除外),任务总金额不得低于50元。

-悬赏任务有哪些类型? 8、网赚类、注册类任务总金额不能低于300元人民币,计件任务每个稿件的平均单价不能低于1元人民币。

-悬赏任务有哪些类型? 9、延期任务只有3次加价机会,第1次加价不得低于任务金额的10%,第2次加价不得低于任务总金额的20%,第3次不得低于任务总金额的50%。

-悬赏任务有哪些类型? 每次延期不能超过15天,加价金额不低于50元,特殊任务可以适当加长。

-悬赏任务有哪些类型? 如果为计件任务,且不是网赚类任务,将免费延期,直至征集完规定数量的作品为止。

-悬赏任务有哪些类型? 10、如果威客以交接源文件要挟任务发布者,威客网将扣除威客相关信用值,并取消其中标资格,同时任务将免费延长相应的时间继续征集作品 。

-江湖令由哪些平台运营? 《江湖令》是以隋唐时期为背景的RPG角色扮演类网页游戏。

-江湖令由哪些平台运营? 集角色扮演、策略、冒险等多种游戏元素为一体,画面精美犹如客户端游戏,融合历史、江湖、武功、恩仇多种特色元素,是款不可多得的精品游戏大作。

-江湖令由哪些平台运营? 由ya247平台、91wan游戏平台、2918、4399游戏平台、37wan、6711、兄弟玩网页游戏平台,49you、Y8Y9平台、8090游戏等平台运营的,由07177游戏网发布媒体资讯的网页游戏。

-江湖令由哪些平台运营? 网页游戏《江湖令》由51游戏社区运营,是以隋唐时期为背景的RPG角色扮演类网页游戏。

-江湖令由哪些平台运营? 集角色扮演、策略、冒险等多种游戏元素为一体,画面精美犹如客户端游戏,融合历史、江湖、武功、恩仇多种特色元素,是款不可多得的精品游戏大作…

-江湖令由哪些平台运营? 背景故事:

-江湖令由哪些平台运营? 隋朝末年,隋炀帝暴政,天下民不聊生,义军四起。

-江湖令由哪些平台运营? 在这动荡的时代中,百姓生活苦不堪言,多少人流离失所,家破人亡。

-江湖令由哪些平台运营? 天下三大势力---飞羽营、上清宫、侠隐岛,也值此机会扩张势力,派出弟子出来行走江湖。

-江湖令由哪些平台运营? 你便是这些弟子中的普通一员,在这群雄并起的年代,你将如何选择自己的未来。

-江湖令由哪些平台运营? 所有的故事,便从瓦岗寨/江都大营开始……

-江湖令由哪些平台运营? 势力:

-江湖令由哪些平台运营? ①、飞羽营:【外功、根骨】

-江湖令由哪些平台运营? 南北朝时期,由北方政权创立的一个民间军事团体,经过多年的发展,逐渐成为江湖一大势力。

-江湖令由哪些平台运营? ②、上清宫:【外功、身法】

-江湖令由哪些平台运营? 道家圣地,宫中弟子讲求清静无为,以一种隐世的方式修炼,但身在此乱世,亦也不能独善其身。

-江湖令由哪些平台运营? ③、侠隐岛:【根骨、内力】

-江湖令由哪些平台运营? 位于偏远海岛上的一个世家,岛内弟子大多武功高强,但从不进入江湖行走,适逢乱世,现今岛主也决意作一翻作为。

-江湖令由哪些平台运营? 两大阵营:

-江湖令由哪些平台运营? 义军:隋唐末期,百姓生活苦不堪言,有多个有志之士组成义军,对抗当朝暴君,希望建立一个适合百姓安居乐业的天地。

-江湖令由哪些平台运营? 隋军:战争一起即天下打乱,隋军首先要镇压四起的义军,同时在内部慢慢改变现有的朝廷,让天下再次恢复到昔日的安定。

-江湖令由哪些平台运营? 一、宠物品质

-江湖令由哪些平台运营? 宠物的品质分为:灵兽,妖兽,仙兽,圣兽,神兽

-江湖令由哪些平台运营? 二、宠物获取途径

-江湖令由哪些平台运营? 完成任务奖励宠物(其他途径待定)。

-江湖令由哪些平台运营? 三、宠物融合

-江湖令由哪些平台运营? 1、在主界面下方的【宠/骑】按钮进入宠物界面,再点击【融合】即可进入融合界面进行融合,在融合界面可选择要融合的宠物进行融合

-江湖令由哪些平台运营? 2、融合后主宠的形态不变;

-江湖令由哪些平台运营? 3、融合后宠物的成长,品质,技能,经验,成长经验,等级都继承成长高的宠物;

-江湖令由哪些平台运营? 4、融合宠物技能冲突,则保留成长值高的宠物技能,如果不冲突则叠加在空余的技能位置。

-请问土耳其足球超级联赛是什么时候成立的? 土耳其足球超级联赛(土耳其文:Türkiye 1. Süper Futbol Ligi)是土耳其足球协会管理的职业足球联赛,通常简称“土超”,也是土耳其足球联赛中最高级别。

-请问土耳其足球超级联赛是什么时候成立的? 目前,土超联赛队伍共有18支。

-请问土耳其足球超级联赛是什么时候成立的? 土耳其足球超级联赛

-请问土耳其足球超级联赛是什么时候成立的? 运动项目 足球

-请问土耳其足球超级联赛是什么时候成立的? 成立年份 1959年

-请问土耳其足球超级联赛是什么时候成立的? 参赛队数 18队

-请问土耳其足球超级联赛是什么时候成立的? 国家 土耳其

-请问土耳其足球超级联赛是什么时候成立的? 现任冠军 费内巴切足球俱乐部(2010-2011)

-请问土耳其足球超级联赛是什么时候成立的? 夺冠最多队伍 费内巴切足球俱乐部(18次)

-请问土耳其足球超级联赛是什么时候成立的? 土耳其足球超级联赛(Türkiye 1. Süper Futbol Ligi)是土耳其足球协会管理的职业足球联赛,通常简称「土超」,也是土耳其足球联赛中最高级别。

-请问土耳其足球超级联赛是什么时候成立的? 土超联赛队伍共有18支。

-请问土耳其足球超级联赛是什么时候成立的? 土超联赛成立于1959年,成立之前土耳其国有多个地区性联赛。

-请问土耳其足球超级联赛是什么时候成立的? 土超联赛成立后便把各地方联赛制度统一起来。

-请问土耳其足球超级联赛是什么时候成立的? 一般土超联赛由八月开始至五月结束,12月至1月会有歇冬期。

-请问土耳其足球超级联赛是什么时候成立的? 十八支球队会互相对叠,各有主场和作客两部分,采计分制。

-请问土耳其足球超级联赛是什么时候成立的? 联赛枋最底的三支球队会降到土耳其足球甲级联赛作赛。

-请问土耳其足球超级联赛是什么时候成立的? 由2005-06年球季起,土超联赛的冠、亚军会取得参加欧洲联赛冠军杯的资格。

-请问土耳其足球超级联赛是什么时候成立的? 成立至今土超联赛乃由两支著名球会所垄断──加拉塔萨雷足球俱乐部和费内巴切足球俱乐部,截至2009-2010赛季,双方各赢得冠军均为17次。

-请问土耳其足球超级联赛是什么时候成立的? 土超联赛共有18支球队,采取双循环得分制,每场比赛胜方得3分,负方0分,平局双方各得1分。

-请问土耳其足球超级联赛是什么时候成立的? 如果两支球队积分相同,对战成绩好的排名靠前,其次按照净胜球来决定;如果有三支以上的球队分数相同,则按照以下标准来确定排名:1、几支队伍间对战的得分,2、几支队伍间对战的净胜球数,3、总净胜球数。

-请问土耳其足球超级联赛是什么时候成立的? 联赛第1名直接参加下个赛季冠军杯小组赛,第2名参加下个赛季冠军杯资格赛第三轮,第3名进入下个赛季欧洲联赛资格赛第三轮,第4名进入下个赛季欧洲联赛资格赛第二轮,最后三名降入下个赛季的土甲联赛。

-请问土耳其足球超级联赛是什么时候成立的? 该赛季的土耳其杯冠军可参加下个赛季欧洲联赛资格赛第四轮,如果冠军已获得冠军杯资格,则亚军可参加下个赛季欧洲联赛资格赛第四轮,否则名额递补给联赛。

-请问土耳其足球超级联赛是什么时候成立的? 2010年/2011年 费内巴切

-请问土耳其足球超级联赛是什么时候成立的? 2009年/2010年 布尔萨体育(又译贝莎)

-请问土耳其足球超级联赛是什么时候成立的? 2008年/2009年 贝西克塔斯

-请问土耳其足球超级联赛是什么时候成立的? 2007年/2008年 加拉塔萨雷

-请问土耳其足球超级联赛是什么时候成立的? 2006年/2007年 费内巴切

-请问土耳其足球超级联赛是什么时候成立的? 2005年/2006年 加拉塔沙雷

-请问土耳其足球超级联赛是什么时候成立的? 2004年/2005年 费内巴切(又译费伦巴治)

-请问土耳其足球超级联赛是什么时候成立的? 2003年/2004年 费内巴切

-cid 作Customer IDentity解时是什么意思? ? CID 是 Customer IDentity 的简称,简单来说就是手机的平台版本. CID紧跟IMEI存储在手机的OTP(One Time Programmable)芯片中. CID 后面的数字代表的是索尼爱立信手机软件保护版本号,新的CID不断被使用,以用来防止手机被非索尼爱立信官方的维修程序拿来解锁/刷机/篡改

-cid 作Customer IDentity解时是什么意思? ? CID 是 Customer IDentity 的简称,简单来说就是手机的平台版本. CID紧跟IMEI存储在手机的OTP(One Time Programmable)芯片中. CID 后面的数字代表的是索尼爱立信手机软件保护版本号,新的CID不断被使用,以用来防止手机被非索尼爱立信官方的维修程序拿来解锁/刷机/篡改

-cid 作Customer IDentity解时是什么意思? ? (英)刑事调查局,香港警察的重案组

-cid 作Customer IDentity解时是什么意思? ? Criminal Investigation Department

-cid 作Customer IDentity解时是什么意思? ? 佩枪:

-cid 作Customer IDentity解时是什么意思? ? 香港警察的CID(刑事侦缉队),各区重案组的探员装备短管点38左轮手枪,其特点是便于收藏,而且不容易卡壳,重量轻,其缺点是装弹量少,只有6发,而且换子弹较慢,威力也一般,如果碰上54式手枪或者M9手枪明显处于下风。

-cid 作Customer IDentity解时是什么意思? ? 香港警察的“刑事侦查”(Criminal Investigation Department)部门,早于1983年起已经不叫做C.I.D.的了,1983年香港警察队的重整架构,撤销了C.I.D. ( Criminal Investigation Dept.) “刑事侦缉处”,将“刑事侦查”部门归入去“行动处”内,是“行动处”内的一个分支部门,叫“刑事部”( Crime Wing )。

-cid 作Customer IDentity解时是什么意思? ? 再于90年代的一次警队重整架构,香港警队成立了新的「刑事及保安处」,再将“刑事侦查”部门归入目前的「刑事及保安处」的“处”级单位,是归入这个“处”下的一个部门,亦叫“刑事部” ( Crime Wing ),由一个助理警务处长(刑事)领导。

-cid 作Customer IDentity解时是什么意思? ? 但是时至今天,CID虽已经是一个老旧的名称,香港市民、甚至香港警察都是习惯性的沿用这个历史上的叫法 .

-cid 作Customer IDentity解时是什么意思? ? CID格式是美国Adobe公司发表的最新字库格式,它具有易扩充、速度快、兼容性好、简便、灵活等特点,已成为国内开发中文字库的热点,也为用户使用字库提供质量更好,数量更多的字体。

-cid 作Customer IDentity解时是什么意思? ? CID (Character identifier)就是字符识别码,在组成方式上分成CIDFont,CMap表两部分。

-cid 作Customer IDentity解时是什么意思? ? CIDFont文件即总字符集,包括了一种特定语言中所有常用的字符,把这些字符排序,它们在总字符集中排列的次序号就是各个字符的CID标识码(Index);CMap(Character Map)表即字符映像文件,将字符的编码(Code)映像到字符的CID标识码(Index)。

-cid 作Customer IDentity解时是什么意思? ? CID字库完全针对大字符集市场设计,其基本过程为:先根据Code,在CMap表查到Index,然后在CIDFont文件找到相应的字形数据。

-本町位于什么地方? 本条目记述台湾日治时期,各都市之本町。

-本町位于什么地方? 为台湾日治时期台北市之行政区,共分一~四丁目,在表町之西。

-本町位于什么地方? 以现在的位置来看,本町位于现台北市中正区的西北角,约位于忠孝西路一段往西至台北邮局东侧。

-本町位于什么地方? 再向南至开封街一段,沿此路线向西至开封街一段60号,顺60号到汉口街一段向东到现在华南银行总行附近画一条直线到衡阳路。

-本町位于什么地方? 再向东至重庆南路一段,由重庆南路一段回到原点这个范围内。

-本町位于什么地方? 另外,重庆南路一段在当时名为“本町通”。

-本町位于什么地方? 此地方自日治时期起,就是繁华的商业地区,当时也有三和银行、台北专卖分局、日本石油等重要商业机构。

-本町位于什么地方? 其中,专卖分局是战后二二八事件的主要起始点。

-本町位于什么地方? 台湾贮蓄银行(一丁目)

-本町位于什么地方? 三和银行(二丁目)

-本町位于什么地方? 专卖局台北分局(三丁目)

-本町位于什么地方? 日本石油(四丁目)

-本町位于什么地方? 为台湾日治时期台南市之行政区。

-本町位于什么地方? 范围包括清代旧街名枋桥头前、枋桥头后、鞋、草花、天公埕、竹仔、下大埕、帽仔、武馆、统领巷、大井头、内宫后、内南町。

-本町位于什么地方? 为清代台南城最繁华的区域。

-本町位于什么地方? 台南公会堂

-本町位于什么地方? 北极殿

-本町位于什么地方? 开基武庙

-本町位于什么地方? 町名改正

-本町位于什么地方? 这是一个与台湾相关的小作品。

-本町位于什么地方? 你可以通过编辑或修订扩充其内容。

-《行走的观点:埃及》的条形码是多少? 出版社: 上海社会科学院出版社; 第1版 (2006年5月1日)

-《行走的观点:埃及》的条形码是多少? 丛书名: 时代建筑视觉旅行丛书

-《行走的观点:埃及》的条形码是多少? 条形码: 9787806818640

-《行走的观点:埃及》的条形码是多少? 尺寸: 18 x 13.1 x 0.7 cm

-《行走的观点:埃及》的条形码是多少? 重量: 181 g

-《行走的观点:埃及》的条形码是多少? 漂浮在沙与海市蜃楼之上的金字塔曾经是否是你的一个梦。

-《行走的观点:埃及》的条形码是多少? 埃及,这片蕴蓄了5000年文明的土地,本书为你撩开它神秘的纱。

-《行走的观点:埃及》的条形码是多少? 诸神、金字塔、神庙、狮身人面像、法老、艳后吸引着我们的注意力;缠绵悱恻的象形文字、医学、雕刻等留给我们的文明,不断引发我们对古代文明的惊喜和赞叹。

-《行走的观点:埃及》的条形码是多少? 尼罗河畔的奇异之旅,数千年的古老文明,尽收在你的眼底……

-《行走的观点:埃及》的条形码是多少? 本书集历史、文化、地理等知识于一体,并以优美、流畅文笔,简明扼要地阐述了埃及的地理环境、政治经济、历史沿革、文化艺术,以大量富有艺术感染力的彩色照片,生动形象地展示了埃及最具特色的名胜古迹、风土人情和自然风光。

-《行走的观点:埃及》的条形码是多少? 古埃及历史

-老挝人民军的工兵部队有几个营? 老挝人民军前身为老挝爱国战线领导的“寮国战斗部队”(即“巴特寮”),始建于1949年1月20日,1965年10月改名为老挝人民解放军,1982年7月改称现名。

-老挝人民军的工兵部队有几个营? 最高领导机构是中央国防和治安委员会,朱马里·赛雅颂任主席,隆再·皮吉任国防部长。

-老挝人民军的工兵部队有几个营? 实行义务兵役制,服役期最少18个月。[1]

-老挝人民军的工兵部队有几个营? ?老挝军队在老挝社会中有较好的地位和保障,工资待遇比地方政府工作人员略高。

-老挝人民军的工兵部队有几个营? 武装部队总兵力约6万人,其中陆军约5万人,主力部队编为5个步兵师;空军2000多人;海军(内河巡逻部队)1000多人;部队机关院校5000人。[1]

-老挝人民军的工兵部队有几个营? 老挝人民军军旗

-老挝人民军的工兵部队有几个营? 1991年8月14日通过的《老挝人民民主共和国宪法》第11条规定:国家执行保卫国防和维护社会安宁的政策。

-老挝人民军的工兵部队有几个营? 全体公民和国防力量、治安力量必须发扬忠于祖国、忠于人民的精神,履行保卫革命成果、保卫人民生命财产及和平劳动的任务,积极参加国家建设事业。

-老挝人民军的工兵部队有几个营? 最高领导机构是中央国防和治安委员会。

-老挝人民军的工兵部队有几个营? 主席由老挝人民革命党中央委员会总书记兼任。

-老挝人民军的工兵部队有几个营? 老挝陆军成立最早,兵力最多,约有5万人。

-老挝人民军的工兵部队有几个营? 其中主力部队步兵师5个、7个独立团、30多个营、65个独立连。

-老挝人民军的工兵部队有几个营? 地方部队30余个营及县属部队。

-老挝人民军的工兵部队有几个营? 地面炮兵2个团,10多个营。

-老挝人民军的工兵部队有几个营? 高射炮兵1个团9个营。

-老挝人民军的工兵部队有几个营? 导弹部队2个营。

-老挝人民军的工兵部队有几个营? 装甲兵7个营。

-老挝人民军的工兵部队有几个营? 特工部队6个营。

-老挝人民军的工兵部队有几个营? 通讯部队9个营。

-老挝人民军的工兵部队有几个营? 工兵部队6个营。

-老挝人民军的工兵部队有几个营? 基建工程兵2个团13个营。

-老挝人民军的工兵部队有几个营? 运输部队7个营。

-老挝人民军的工兵部队有几个营? 陆军的装备基本是中国和前苏联援助的装备和部分从抗美战争中缴获的美式装备。

-老挝人民军的工兵部队有几个营? 老挝内河部队总兵力约1700人,装备有内河船艇110多艘,编成4个艇队。

-老挝人民军的工兵部队有几个营? 有芒宽、巴能、纳坎、他曲、南盖、巴色等8个基地。

-老挝人民军的工兵部队有几个营? 空军于1975年8月组建,现有2个团、11个飞行大队,总兵力约2000人。

-老挝人民军的工兵部队有几个营? 装备有各种飞机140架,其中主要由前苏联提供和从万象政权的皇家空军手中接管。

-老挝人民军的工兵部队有几个营? 随着军队建设质量的提高,老挝人民军对外军事合作步伐也日益扩大,近年来先后与俄罗斯、印度、马来西亚、越南、菲律宾等国拓展了军事交流与合作的内容。

-老挝人民军的工兵部队有几个营? 2003年1月,印度决定向老挝援助一批军事装备和物资,并承诺提供技术帮助。

-老挝人民军的工兵部队有几个营? 2003年6月,老挝向俄罗斯订购了一批新式防空武器;2003年4月,老挝与越南签署了越南帮助老挝培训军事指挥干部和特种部队以及完成军队通信系统改造等多项协议。

-《焚心之城》的主角是谁? 《焚心之城》[1] 为网络作家老子扛过枪创作的一部都市类小说,目前正在创世中文网连载中。

-《焚心之城》的主角是谁? 乡下大男孩薛城,是一个不甘于生活现状的混混,他混过、爱过、也深深地被伤害过。

-《焚心之城》的主角是谁? 本料此生当浑浑噩噩,拼搏街头。

-《焚心之城》的主角是谁? 高考的成绩却给了他一点渺茫的希望,二月后,大学如期吹响了他进城的号角。

-《焚心之城》的主角是谁? 繁华的都市,热血的人生,冷眼嘲笑中,他发誓再不做一个平常人!

-《焚心之城》的主角是谁? 江北小城,黑河大地,他要行走过的每一个角落都有他的传说。

-《焚心之城》的主角是谁? 扯出一面旗,拉一帮兄弟,做男人,就要多一份担当,活一口傲气。

-《焚心之城》的主角是谁? (日期截止到2014年10月23日凌晨)

-请问香港利丰集团是什么时候成立的? 香港利丰集团前身是广州的华资贸易 (1906 - 1949) ,利丰是香港历史最悠久的出口贸易商号之一。

-请问香港利丰集团是什么时候成立的? 于1906年,冯柏燎先生和李道明先生在广州创立了利丰贸易公司;是当时中国第一家华资的对外贸易出口商。

-请问香港利丰集团是什么时候成立的? 利丰于1906年创立,初时只从事瓷器及丝绸生意;一年之后,增添了其它的货品,包括竹器、藤器、玉石、象牙及其它手工艺品,包括烟花爆竹类别。

-请问香港利丰集团是什么时候成立的? 在早期的对外贸易,中国南方内河港因水深不足不能行驶远洋船,反之香港港口水深岸阔,占尽地利。

-请问香港利丰集团是什么时候成立的? 因此,在香港成立分公司的责任,落在冯柏燎先生的三子冯汉柱先生身上。

-请问香港利丰集团是什么时候成立的? 1937年12月28日,利丰(1937)有限公司正式在香港创立。

-请问香港利丰集团是什么时候成立的? 第二次世界大战期间,利丰暂停贸易业务。

-请问香港利丰集团是什么时候成立的? 1943年,随着创办人冯柏燎先生去世后,业务移交给冯氏家族第二代。

-请问香港利丰集团是什么时候成立的? 之后,向来不参与业务管理的合伙人李道明先生宣布退休,将所拥有的利丰股权全部卖给冯氏家族。

-请问香港利丰集团是什么时候成立的? 目前由哈佛冯家两兄弟William Fung , Victor Fung和CEO Bruce Rockowitz 管理。

-请问香港利丰集团是什么时候成立的? 截止到2012年,集团旗下有利亚﹝零售﹞有限公司、利和集团、利邦时装有限公司、利越时装有限公司、利丰贸易有限公司。

-请问香港利丰集团是什么时候成立的? 利亚(零售)连锁,业务包括大家所熟悉的:OK便利店、玩具〝反〞斗城和圣安娜饼屋;范围包括香港、台湾、新加坡、马来西亚、至中国大陆及东南亚其它市场逾600多家店

-请问香港利丰集团是什么时候成立的? 利和集团,IDS以专业物流服务为根基,为客户提供经销,物流,制造服务领域内的一系列服务项目。

-请问香港利丰集团是什么时候成立的? 业务网络覆盖大中华区,东盟,美国及英国,经营着90多个经销中心,在中国设有18个经销公司,10,000家现代经销门店。

-请问香港利丰集团是什么时候成立的? 利邦(上海)时装贸易有限公司为大中华区其中一家大型男士服装零售集团。

-请问香港利丰集团是什么时候成立的? 现在在中国大陆、香港、台湾和澳门收购经营11个包括Cerruti 1881,Gieves & Hawkes,Kent & curwen和D’urban 等中档到高档的男士服装品牌,全国有超过350间门店设于各一线城市之高级商场及百货公司。

-请问香港利丰集团是什么时候成立的? 利越(上海)服装商贸有限公司隶属于Branded Lifestyle,负责中国大陆地区LEO里奥(意大利)、GIBO捷宝(意大利)、UFFIZI古杰师(意大利)、OVVIO奥维路(意大利)、Roots绿适(加拿大,全球服装排名第四)品牌销售业务

-请问香港利丰集团是什么时候成立的? 利丰(贸易)1995年收购了英之杰采购服务,1999年收购太古贸易有限公司(Swire & Maclain) 和金巴莉有限公司(Camberley),2000年和2002年分别收购香港采购出口集团Colby Group及Janco Oversea Limited,大大扩张了在美国及欧洲的顾客群,自2008年经济危机起一直到现在,收购多家欧、美、印、非等地区的时尚品牌,如英国品牌Visage,仅2011年上半年6个月就完成26个品牌的收购。

-请问香港利丰集团是什么时候成立的? 2004年利丰与Levi Strauss & Co.签订特许经营协议

-请问香港利丰集团是什么时候成立的? 2005年利丰伙拍Daymon Worldwide为全球供应私有品牌和特许品牌

-请问香港利丰集团是什么时候成立的? 2006年收购Rossetti手袋业务及Oxford Womenswear Group 强化美国批发业务

-请问香港利丰集团是什么时候成立的? 2007年收购Tommy Hilfiher全球采购业务,收购CGroup、Peter Black International LTD、Regetta USA LLC和American Marketing Enterprice

-请问香港利丰集团是什么时候成立的? 2008年收购Kent&Curwen全球特许经营权,收购Van Zeeland,Inc和Miles Fashion Group

-请问香港利丰集团是什么时候成立的? 2009年收购加拿大休闲品牌Roots ,收购Wear Me Appearl,LLC。

-请问香港利丰集团是什么时候成立的? 与Hudson's Bay、Wolverine Worldwide Inc、Talbots、Liz Claiborne达成了采购协议

-请问香港利丰集团是什么时候成立的? 2010年收购Oxford apparel Visage Group LTD

-请问香港利丰集团是什么时候成立的? 2011年一月收购土耳其Modium、美国女性时尚Beyond Productions,三月收购贸易公司Celissa 、玩具公司Techno Source USA, Inc.、卡通品牌产品TVMania和法国著名时装一线品牌Cerruti 1881,五月收购Loyaltex Apparel Ltd.、女装Hampshire Designers和英国彩妆Collection 2000,六月收购家私贸易Exim Designs Co., Ltd.,七月收购家庭旅行产业Union Rich USA, LLC和设计公司Lloyd Textile Fashion Company Limited,八月收购童装Fishman & Tobin和Crimzon Rose,九月收购家私贸易True Innovations, LLC、日用品企业Midway Enterprises和Wonderful World。

-请问香港利丰集团是什么时候成立的? 十二月与USPA – U.S. Polo Association签署授权协议。

-请问香港利丰集团是什么时候成立的? 利丰的精神:积极进取,不断认识并争取有利于客户和自身进步的机会;以行动为主导,对客户、供应商及职工的需求作出快速的决定。

-请问香港利丰集团是什么时候成立的? 利丰的最终目标:在产品采购、销售、流转的各环节建立全球性队伍提供多元化服务,利丰成员有效合作,共达目标。

-如何使魔兽变种akt不被查杀? Trojan/PSW.Moshou.akt“魔兽”变种akt是“魔兽”木马家族的最新成员之一,采用Delphi 6.0-7.0编写,并经过加壳处理。

-如何使魔兽变种akt不被查杀? “魔兽”变种akt运行后,自我复制到被感染计算机的指定目录下。

-如何使魔兽变种akt不被查杀? 修改注册表,实现木马开机自动运行。

-如何使魔兽变种akt不被查杀? 自我注入到被感染计算机的“explorer.exe”、“notepad.exe”等用户级权限的进程中加载运行,隐藏自我,防止被查杀。

-如何使魔兽变种akt不被查杀? 在后台秘密监视用户打开的窗口标题,盗取网络游戏《魔兽世界》玩家的游戏帐号、游戏密码、角色等级、装备信息、金钱数量等信息,并在后台将窃取到的玩家信息发送到骇客指定的远程服务器上,致使玩家游戏帐号、装备物品、金钱等丢失,给游戏玩家造成非常大的损失。

-丙种球蛋白能预防什么病情? 丙种球蛋白预防传染性肝炎,预防麻疹等病毒性疾病感染,治疗先天性丙种球蛋白缺乏症 ,与抗生素合并使用,可提高对某些严重细菌性和病毒性疾病感染的疗效。

-丙种球蛋白能预防什么病情? 中文简称:“丙球”

-丙种球蛋白能预防什么病情? 英文名称:γ-globulin、gamma globulin

-丙种球蛋白能预防什么病情? 【别名】 免疫血清球蛋白,普通免疫球蛋白,人血丙种球蛋白,丙种球蛋白,静脉注射用人免疫球蛋白(pH4)

-丙种球蛋白能预防什么病情? 注:由于人血中的免疫球蛋白大多数为丙种球蛋白(γ-球蛋白),有时丙种球蛋白也被混称为“免疫球蛋白”(immunoglobulin) 。

-丙种球蛋白能预防什么病情? 冻干制剂应为白色或灰白色的疏松体,液体制剂和冻干制剂溶解后,溶液应为接近无色或淡黄色的澄明液体,微带乳光。

-丙种球蛋白能预防什么病情? 但不应含有异物或摇不散的沉淀。

-丙种球蛋白能预防什么病情? 注射丙种球蛋白是一种被动免疫疗法。

-丙种球蛋白能预防什么病情? 它是把免疫球蛋白内含有的大量抗体输给受者,使之从低或无免疫状态很快达到暂时免疫保护状态。

-丙种球蛋白能预防什么病情? 由于抗体与抗原相互作用起到直接中和毒素与杀死细菌和病毒。

-丙种球蛋白能预防什么病情? 因此免疫球蛋白制品对预防细菌、病毒性感染有一定的作用[1]。

-丙种球蛋白能预防什么病情? 人免疫球蛋白的生物半衰期为16~24天。

-丙种球蛋白能预防什么病情? 1、丙种球蛋白[2]含有健康人群血清所具有的各种抗体,因而有增强机体抵抗力以预防感染的作用。

-丙种球蛋白能预防什么病情? 2、主要治疗先天性丙种球蛋白缺乏症和免疫缺陷病

-丙种球蛋白能预防什么病情? 3、预防传染性肝炎,如甲型肝炎和乙型肝炎等。

-丙种球蛋白能预防什么病情? 4、用于麻疹、水痘、腮腺炎、带状疱疹等病毒感染和细菌感染的防治

-丙种球蛋白能预防什么病情? 5、也可用于哮喘、过敏性鼻炎、湿疹等内源性过敏性疾病。

-丙种球蛋白能预防什么病情? 6、与抗生素合并使用,可提高对某些严重细菌性和病毒性疾病感染的疗效。

-丙种球蛋白能预防什么病情? 7、川崎病,又称皮肤粘膜淋巴结综合征,常见于儿童,丙种球蛋白是主要的治疗药物。

-丙种球蛋白能预防什么病情? 1、对免疫球蛋白过敏或有其他严重过敏史者。

-丙种球蛋白能预防什么病情? 2、有IgA抗体的选择性IgA缺乏者。

-丙种球蛋白能预防什么病情? 3、发烧患者禁用或慎用。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (1997年9月1日浙江省第八届人民代表大会常务委员会第三十九次会议通过 1997年9月9日浙江省第八届人民代表大会常务委员会公告第六十九号公布自公布之日起施行)

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 为了保护人的生命和健康,发扬人道主义精神,促进社会发展与和平进步事业,根据《中华人民共和国红十字会法》,结合本省实际,制定本办法。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 本省县级以上按行政区域建立的红十字会,是中国红十字会的地方组织,是从事人道主义工作的社会救助团体,依法取得社会团体法人资格,设置工作机构,配备专职工作人员,依照《中国红十字会章程》独立自主地开展工作。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 全省性行业根据需要可以建立行业红十字会,配备专职或兼职工作人员。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 街道、乡(镇)、机关、团体、学校、企业、事业单位根据需要,可以依照《中国红十字会章程》建立红十字会的基层组织。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 上级红十字会指导下级红十字会的工作。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 县级以上地方红十字会指导所在行政区域行业红十字会和基层红十字会的工作。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 人民政府对红十字会给予支持和资助,保障红十字会依法履行职责,并对其活动进行监督;红十字会协助人民政府开展与其职责有关的活动。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 全社会都应当关心和支持红十字事业。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 本省公民和单位承认《中国红十字会章程》并缴纳会费的,可以自愿参加红十字会,成为红十字会的个人会员或团体会员。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 个人会员由本人申请,基层红十字会批准,发给会员证;团体会员由单位申请,县级以上红十字会批准,发给团体会员证。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 个人会员和团体会员应当遵守《中华人民共和国红十字会法》和《中国红十字会章程》,热心红十字事业,履行会员的义务,并享有会员的权利。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 县级以上红十字会理事会由会员代表大会民主选举产生。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 理事会民主选举产生会长和副会长;根据会长提名,决定秘书长、副秘书长人选。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 县级以上红十字会可以设名誉会长、名誉副会长和名誉理事,由同级红十字会理事会聘请。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 省、市(地)红十字会根据独立、平等、互相尊重的原则,发展同境外、国外地方红十字会和红新月会的友好往来和合作关系。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 红十字会履行下列职责:

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (一)宣传、贯彻《中华人民共和国红十字会法》和本办法;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (二)开展救灾的准备工作,筹措救灾款物;在自然灾害和突发事件中,对伤病人员和其他受害者进行救助;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (三)普及卫生救护和防病知识,进行初级卫生救护培训,对交通、电力、建筑、矿山等容易发生意外伤害的单位进行现场救护培训;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (四)组织群众参加现场救护;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (五)参与输血献血工作,推动无偿献血;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (六)开展红十字青少年活动;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (七)根据中国红十字会总会部署,参加国际人道主义救援工作;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (八)依照国际红十字和红新月运动的基本原则,完成同级人民政府和上级红十字会委托的有关事宜;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (九)《中华人民共和国红十宇会法》和《中国红十字会章程》规定的其他职责。

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? 第八条 红十字会经费的主要来源:

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (一)红十字会会员缴纳的会费;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (二)接受国内外组织和个人捐赠的款物;

-浙江省实施《中华人民共和国红十字会法》办法在浙江省第八届人民代表大会常务委员会第几次会议通过的? (三)红十字会的动产、不动产以及兴办社会福利事业和经济实体的收入;

-宝湖庭院绿化率多少? 建发·宝湖庭院位于银川市金凤区核心地带—正源南街与长城中路交汇处向东500米。

-宝湖庭院绿化率多少? 项目已于2012年4月开工建设,总占地约4.2万平方米,总建筑面积约11.2万平方米,容积率2.14,绿化率35%,预计可入住630户。

-宝湖庭院绿化率多少? “建发·宝湖庭院”是银川建发集团股份有限公司继“建发·宝湖湾”之后,在宝湖湖区的又一力作。

-宝湖庭院绿化率多少? 项目周边发展成熟,东有唐徕渠景观水道,西临银川市交通主干道正源街;南侧与宝湖湿地公园遥相呼应。

-宝湖庭院绿化率多少? “宝湖庭院”项目公共交通资源丰富:15路、21路、35路、38路、43路公交车贯穿银川市各地,出行便利。

-宝湖庭院绿化率多少? 距离新百良田购物广场约1公里,工人疗养院600米,宝湖公园1公里,唐徕渠景观水道500米。

-宝湖庭院绿化率多少? 项目位置优越,购物、餐饮、医疗、交通、休闲等生活资源丰富。[1]

-宝湖庭院绿化率多少? 建发·宝湖庭院建筑及景观设置传承建发一贯“简约、大气”的风格:搂间距宽广,确保每一座楼宇视野开阔通透。

-宝湖庭院绿化率多少? 楼宇位置错落有置,外立面设计大气沉稳别致。

-宝湖庭院绿化率多少? 项目内部休闲绿地、景观小品点缀其中,道路及停车系统设计合理,停车及通行条件便利。

-宝湖庭院绿化率多少? 社区会所、幼儿园、活动室、医疗服务中心等生活配套一应俱全。

-宝湖庭院绿化率多少? 行政区域:金凤区

-大月兔(中秋艺术作品)的作者还有哪些代表作? 大月兔是荷兰“大黄鸭”之父弗洛伦泰因·霍夫曼打造的大型装置艺术作品,该作品首次亮相于台湾桃园大园乡海军基地,为了迎接中秋节的到来;在展览期间,海军基地也首次对外开放。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 霍夫曼觉得中国神话中捣杵的玉兔很有想象力,于是特别创作了“月兔”,这也是“月兔”新作第一次展出。[1]

-大月兔(中秋艺术作品)的作者还有哪些代表作? ?2014年9月15日因工人施工不慎,遭火烧毁。[2]

-大月兔(中秋艺术作品)的作者还有哪些代表作? “大月兔”外表采用的杜邦防水纸、会随风飘动,内部以木材加保丽龙框架支撑做成。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 兔毛用防水纸做成,材质完全防水,不怕日晒雨淋。[3

-大月兔(中秋艺术作品)的作者还有哪些代表作? -4]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 25米的“月兔”倚靠在机

-大月兔(中秋艺术作品)的作者还有哪些代表作? 堡上望着天空,像在思考又像赏月。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 月兔斜躺在机堡上,意在思考生命、边做白日梦,编织自己的故事。[3]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 台湾桃园大园乡海军基地也首度对外开放。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 428公顷的海军基地中,地景艺术节使用约40公顷,展场包括过去军机机堡、跑道等,由于这处基地过去警备森严,不对外开放,这次结合地景艺术展出,也可一窥过去是黑猫中队基地的神秘面纱。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 2014年9月2日,桃园县政府文化局举行“踩线团”,让

-大月兔(中秋艺术作品)的作者还有哪些代表作? 大月兔

-大月兔(中秋艺术作品)的作者还有哪些代表作? 各项地景艺术作品呈现在媒体眼中,虽然“月兔”仍在进行最后的细节赶工,但横躺在机堡上的“月兔”雏形已经完工。[5]

-大月兔(中秋艺术作品)的作者还有哪些代表作? “这么大”、“好可爱呦”是不少踩线团成员对“月兔”的直觉;尤其在蓝天的衬托及前方绿草的组合下,呈现犹如真实版的爱丽丝梦游仙境。[6]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 霍夫曼的作品大月兔,“从平凡中,创作出不平凡的视觉”,创造出观赏者打从心中油然而生的幸福感,拉近观赏者的距离。[6]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 2014年9月15日早

-大月兔(中秋艺术作品)的作者还有哪些代表作? 上,施工人员要将月兔拆解,搬离海军基地草皮时,疑施工拆除的卡车,在拆除过程,故障起火,起火的卡车不慎延烧到兔子,造成兔子起火燃烧,消防队员即刻抢救,白色的大月兔立即变成焦黑的火烧兔。[7]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 桃园县府表示相当遗憾及难过,也不排除向包商求偿,也已将此事告知霍夫曼。[2]

-大月兔(中秋艺术作品)的作者还有哪些代表作? ?[8]

-大月兔(中秋艺术作品)的作者还有哪些代表作? 弗洛伦泰因·霍夫曼,荷兰艺术家,以在公共空间创作巨大造型

-大月兔(中秋艺术作品)的作者还有哪些代表作? 物的艺术项目见长。

-大月兔(中秋艺术作品)的作者还有哪些代表作? 代表作品包括“胖猴子”(2010年在巴西圣保罗展出)、“大黄兔”(2011年在瑞典厄勒布鲁展出)、粉红猫(2014年5月在上海亮相)、大黄鸭(Rubber Duck)、月兔等。

-英国耆卫保险公司有多少保险客户? 英国耆卫保险公司(Old Mutual plc)成立于1845年,一直在伦敦证券交易所(伦敦证券交易所:OML)作第一上市,也是全球排名第32位(按营业收入排名)的保险公司(人寿/健康)。

-英国耆卫保险公司有多少保险客户? 公司是全球财富500强公司之一,也是被列入英国金融时报100指数的金融服务集团之一。

-英国耆卫保险公司有多少保险客户? Old Mutual 是一家国际金融服务公司,拥有近320万个保险客户,240万个银行储户,270,000个短期保险客户以及700,000个信托客户

-英国耆卫保险公司有多少保险客户? 英国耆卫保险公司(Old Mutual)是一家国际金融服务公司,总部设在伦敦,主要为全球客户提供长期储蓄的解决方案、资产管理、短期保险和金融服务等,目前业务遍及全球34个国家。[1]

-英国耆卫保险公司有多少保险客户? 主要包括人寿保险,资产管理,银行等。

-英国耆卫保险公司有多少保险客户? 1845年,Old Mutual在好望角成立。

-英国耆卫保险公司有多少保险客户? 1870年,董事长Charles Bell设计了Old Mutual公司的标记。

-英国耆卫保险公司有多少保险客户? 1910年,南非从英联邦独立出来。

-英国耆卫保险公司有多少保险客户? Old Mutual的董事长John X. Merriman被选为国家总理。

-英国耆卫保险公司有多少保险客户? 1927年,Old Mutual在Harare成立它的第一个事务所。

-英国耆卫保险公司有多少保险客户? 1960年,Old Mutual在南非成立了Mutual Unit信托公司,用来管理公司的信托业务。

-英国耆卫保险公司有多少保险客户? 1970年,Old Mutual的收入超过100百万R。

-英国耆卫保险公司有多少保险客户? 1980年,Old Mutual成为南非第一大人寿保险公司,年收入达10亿R。

-英国耆卫保险公司有多少保险客户? 1991年,Old Mutual在美国财富周刊上评选的全球保险公司中名列第38位。

-英国耆卫保险公司有多少保险客户? 1995年,Old Mutual在美国波士顿建立投资顾问公司,同年、又在香港和Guernsey建立事务所。

-英国耆卫保险公司有多少保险客户? 作为一项加强与其母公司联系的举措,OMNIA公司(百慕大)荣幸的更名为Old Mutual 公司(百慕大) 。

-英国耆卫保险公司有多少保险客户? 这一新的名称和企业识别清晰地展示出公司成为其世界金融机构合作伙伴强有力支持的决心。

-英国耆卫保险公司有多少保险客户? 2003 年4月,该公司被Old Mutual plc公司收购,更名为Sage Life(百慕大)公司并闻名于世,公司为Old Mutual公司提供了一个新的销售渠道,补充了其现有的以美元计价的产品线和分销系统。

-英国耆卫保险公司有多少保险客户? 达到了一个重要里程碑是公司成功的一个例证: 2005年6月3日公司资产超过10亿美元成为公司的一个主要里程碑,也是公司成功的一个例证。

-英国耆卫保险公司有多少保险客户? Old Mutual (百慕大)为客户提供一系列的投资产品。

-英国耆卫保险公司有多少保险客户? 在其开放的结构下,客户除了能够参与由Old Mutual会员管理的方案外,还能够参与由一些世界顶尖投资机构提供的投资选择。

-英国耆卫保险公司有多少保险客户? 首席执行官John Clifford对此发表评论说:“过去的两年对于Old Mutual家族来说是稳固发展的两年,更名是迫在眉睫的事情。

-英国耆卫保险公司有多少保险客户? 通过采用其名字和形象上的相似,Old Mutual (百慕大)进一步强化了与母公司的联系。”

-英国耆卫保险公司有多少保险客户? Clifford补充道:“我相信Old Mutual全球品牌认可度和Old Mutual(百慕大)产品专业知识的结合将在未来的日子里进一步推动公司的成功。”

-英国耆卫保险公司有多少保险客户? 随着公司更名而来的是公司网站的全新改版,设计投资选择信息、陈述、销售方案、营销材料和公告板块。

-英国耆卫保险公司有多少保险客户? 在美国购买不到OMNIA投资产品,该产品也不向美国公民或居民以及百慕大居民提供。

-英国耆卫保险公司有多少保险客户? 这些产品不对任何要约未得到批准的区域中的任何人,以及进行此要约或询价为非法行为的个人构成要约或询价。

-英国耆卫保险公司有多少保险客户? 关于Old Mutual(百慕大)公司

-英国耆卫保险公司有多少保险客户? Old Mutual(百慕大)公司总部位于百慕大,公司面向非美国居民及公民以及非百慕大居民,通过遍布世界的各个市场的金融机构开发和销售保险和投资方案。

-英国耆卫保险公司有多少保险客户? 这些方案由Old Mutual(百慕大)公司直接做出,向投资者提供各种投资选择和战略,同时提供死亡和其他受益保证。

-谁知道北京的淡定哥做了什么? 尼日利亚足球队守门员恩耶马被封淡定哥,原因是2010年南非世界杯上1:2落后希腊队时,对方前锋已经突破到禁区,其仍头依门柱发呆,其从容淡定令人吃惊。

-谁知道北京的淡定哥做了什么? 淡定哥

-谁知道北京的淡定哥做了什么? 在2010年6月17日的世界杯赛场上,尼日利亚1比2不敌希腊队,但尼日利亚门将恩耶马(英文名:Vincent Enyeama)在赛场上的“淡定”表现令人惊奇。

-谁知道北京的淡定哥做了什么? 随后,网友将赛场照片发布于各大论坛,恩耶马迅速窜红,并被网友称为“淡定哥”。

-谁知道北京的淡定哥做了什么? 淡定哥

-谁知道北京的淡定哥做了什么? 从网友上传得照片中可以看到,“淡定哥”在面临对方前锋突袭至小禁区之时,还靠在球门柱上发呆,其“淡定”程度的确非一般人所能及。

-谁知道北京的淡定哥做了什么? 恩耶马是尼日利亚国家队的主力守门员,目前效力于以色列的特拉维夫哈普尔队。

-谁知道北京的淡定哥做了什么? 1999年,恩耶马在尼日利亚国内的伊波姆星队开始职业生涯,后辗转恩伊姆巴、Iwuanyanwu民族等队,从07年开始,他为特拉维夫效力。

-谁知道北京的淡定哥做了什么? 恩耶马的尼日利亚国脚生涯始于2002年,截至2010年1月底,他为国家队出场已超过50次。

-谁知道北京的淡定哥做了什么? 当地时间2011年1月4日,国际足球历史与统计协会(IFFHS)公布了2010年度世界最佳门将,恩耶马(尼日利亚,特拉维夫夏普尔)10票排第十一

-谁知道北京的淡定哥做了什么? 此词经国家语言资源监测与研究中心等机构专家审定入选2010年年度新词语,并收录到《中国语言生活状况报告》中。

-谁知道北京的淡定哥做了什么? 提示性释义:对遇事从容镇定、处变不惊的男性的戏称。

-谁知道北京的淡定哥做了什么? 例句:上海现“淡定哥”:百米外爆炸他仍专注垂钓(2010年10月20日腾讯网http://news.qq.com/a/20101020/000646.htm)

-谁知道北京的淡定哥做了什么? 2011年度新人物

-谁知道北京的淡定哥做了什么? 1、淡定哥(北京)

-谁知道北京的淡定哥做了什么? 7月24日傍晚,北京市出现大范围降雨天气,位于通州北苑路出现积水,公交车也难逃被淹。

-谁知道北京的淡定哥做了什么? 李欣摄图片来源:新华网一辆私家车深陷积水,车主索性盘坐在自己的汽车上抽烟等待救援。

-谁知道北京的淡定哥做了什么? 私家车主索性盘坐在自己的车上抽烟等待救援,被网友称“淡定哥”

-谁知道北京的淡定哥做了什么? 2、淡定哥——林峰

-谁知道北京的淡定哥做了什么? 在2011年7月23日的动车追尾事故中,绍兴人杨峰(@杨峰特快)在事故中失去了5位亲人:怀孕7个月的妻子、未出世的孩子、岳母、妻姐和外甥女,他的岳父也在事故中受伤正在治疗。

-谁知道北京的淡定哥做了什么? 他披麻戴孝出现在事故现场,要求将家人的死因弄个明白。

-谁知道北京的淡定哥做了什么? 但在第一轮谈判过后,表示:“请原谅我,如果我再坚持,我将失去我最后的第六个亲人。”

-谁知道北京的淡定哥做了什么? 如果他继续“纠缠”铁道部,他治疗中的岳父将会“被死亡”。

-谁知道北京的淡定哥做了什么? 很多博友就此批评杨峰,并讽刺其为“淡定哥”。

-071型船坞登陆舰的北约代号是什么? 071型船坞登陆舰(英语:Type 071 Amphibious Transport Dock,北约代号:Yuzhao-class,中文:玉昭级,或以首舰昆仑山号称之为昆仑山级船坞登陆舰),是中国人民解放军海军隶下的大型多功能两栖船坞登陆舰,可作为登陆艇的母舰,用以运送士兵、步兵战车、主战坦克等展开登陆作战,也可搭载两栖车辆,具备大型直升机起降甲板及操作设施。

-071型船坞登陆舰的北约代号是什么? 071型两栖登陆舰是中国首次建造的万吨级作战舰艇,亦为中国大型多功能两栖舰船的开山之作,也可以说是中国万吨级以上大型作战舰艇的试验之作,该舰的建造使中国海军的两栖舰船实力有了质的提升。

-071型船坞登陆舰的北约代号是什么? 在本世纪以前中国海军原有的两栖舰队以一

-071型船坞登陆舰的北约代号是什么? 早期071模型

-071型船坞登陆舰的北约代号是什么? 千至四千吨级登陆舰为主要骨干,这些舰艇吨位小、筹载量有限,直升机操作能力非常欠缺,舰上自卫武装普遍老旧,对于现代化两栖登陆作战可说有很多不足。

-071型船坞登陆舰的北约代号是什么? 为了应对新时期的国际国内形势,中国在本世纪初期紧急强化两栖作战能力,包括短时间内密集建造072、074系列登陆舰,同时也首度设计一种新型船坞登陆舰,型号为071。[1]

-071型船坞登陆舰的北约代号是什么? 在两栖作战行动中,这些舰只不得不采取最危险的

-071型船坞登陆舰的北约代号是什么? 舾装中的昆仑山号

-071型船坞登陆舰的北约代号是什么? 敌前登陆方式实施两栖作战行动,必须与敌人预定阻击力量进行面对面的战斗,在台湾地区或者亚洲其他国家的沿海,几乎没有可用而不设防的海滩登陆地带,并且各国或者地区的陆军在战时,可能会很快控制这些易于登陆的海难和港口,这样就限制住了中国海军两栖登陆部队的实际登陆作战能力。

-071型船坞登陆舰的北约代号是什么? 071型登陆舰正是为了更快和更多样化的登陆作战而开发的新型登陆舰艇。[2]

-071型船坞登陆舰的北约代号是什么? 071型两栖船坞登陆舰具有十分良好的整体隐身能力,

-071型船坞登陆舰的北约代号是什么? 071型概念图

-071型船坞登陆舰的北约代号是什么? 该舰外部线条简洁干练,而且舰体外形下部外倾、上部带有一定角度的内倾,从而形成雷达隐身性能良好的菱形横剖面。

-071型船坞登陆舰的北约代号是什么? 舰体为高干舷平甲板型,长宽比较小,舰身宽满,采用大飞剪型舰首及楔形舰尾,舰的上层建筑位于舰体中间部位,后部是大型直升机甲板,适航性能非常突出。

-071型船坞登陆舰的北约代号是什么? 顶甲板上各类电子设备和武器系统布局十分简洁干净,各系统的突出物很少。

-071型船坞登陆舰的北约代号是什么? 该舰的两座烟囱实行左右分布式设置在舰体两侧,既考虑了隐身特点,也十分新颖。[3]

-071型船坞登陆舰的北约代号是什么? 1号甲板及上层建筑物主要设置有指挥室、控

-071型船坞登陆舰的北约代号是什么? 舰尾俯视

-071型船坞登陆舰的北约代号是什么? 制舱、医疗救护舱及一些居住舱,其中医疗救护舱设置有完备的战场救护设施,可以在舰上为伤病员提供紧急手术和野战救护能力。

-071型船坞登陆舰的北约代号是什么? 2号甲板主要是舰员和部分登陆人员的居住舱、办公室及厨房。

-071型船坞登陆舰的北约代号是什么? 主甲板以下则是登陆舱,分前后两段,前段是装甲车辆储存舱,共两层,可以储存登陆装甲车辆和一些其它物资,在进出口处还设有一小型升降机,用于两层之间的移动装卸用。

-071型船坞登陆舰的北约代号是什么? 前段车辆储存舱外壁左右各设有一折叠式装载舱门,所有装载车辆在码头可通过该门直接装载或者登陆上岸。

-071型船坞登陆舰的北约代号是什么? 后段是一个巨型船坞登陆舱,总长约70米,主要用来停泊大小型气垫登陆艇、机械登陆艇或车辆人员登陆艇。[4]

-071型船坞登陆舰的北约代号是什么? 自卫武装方面,舰艏设有一门PJ-26型76mm舰炮(

-071型船坞登陆舰的北约代号是什么? 井冈山号舰首主炮

-071型船坞登陆舰的北约代号是什么? 俄罗斯AK-176M的中国仿制版,亦被054A采用) , 四具与052B/C相同的726-4 18联装干扰弹发射器分置于舰首两侧以及上层结构两侧,近迫防御则依赖四座布置于上层结构的AK-630 30mm防空机炮 。

-071型船坞登陆舰的北约代号是什么? 原本071模型的舰桥前方设有一座八联装海红-7短程防空导弹发射器,不过071首舰直到出海试航与2009年4月下旬的海上阅兵式中,都未装上此一武器。

-071型船坞登陆舰的北约代号是什么? 电子装备方面, 舰桥后方主桅杆顶配置一具363S型E/F频2D对空/平面搜索雷达 、一具Racal Decca RM-1290 I频导航雷达,后桅杆顶装备一具拥有球型外罩的364型(SR-64)X频2D对空/对海搜索雷达,此外还有一具LR-66C舰炮射控雷达、一具负责导引AK-630机炮的TR-47C型火炮射控雷达等。[5]

-071型船坞登陆舰的北约代号是什么? 071型自卫武装布置

-071型船坞登陆舰的北约代号是什么? 071首舰昆仑山号于2006年6月开

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 竹溪县人大常委会办公室:承担人民代表大会会议、常委会会议、主任会议和常委会党组会议(简称“四会”)的筹备和服务工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责常委会组成人员视察活动的联系服务工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 受主任会议委托,拟定有关议案草案。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担常委会人事任免的具体工作,负责机关人事管理和离退休干部的管理与服务。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担县人大机关的行政事务和后勤保障工作,负责机关的安全保卫、文电处理、档案、保密、文印工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担县人大常委会同市人大常委会及乡镇人大的工作联系。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责信息反馈工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 了解宪法、法律、法规和本级人大及其常委会的决议、决定实施情况及常委会成员提出建议办理情况,及时向常委会和主任会议报告。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担人大宣传工作,负责人大常委会会议宣传的组织和联系。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 组织协调各专门工作委员会开展工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承办上级交办的其他工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 办公室下设五个科,即秘书科、调研科、人事任免科、综合科、老干部科。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 教科文卫工作委员会:负责人大教科文卫工作的日常联系、督办、信息收集反馈和业务指导工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责教科文卫方面法律法规贯彻和人大工作情况的宣传、调研工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担人大常委会教科文卫方面会议议题调查的组织联系和调研材料的起草工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承担教科文卫方面规范性备案文件的初审工作,侧重对教科文卫行政执法个案监督业务承办工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责常委会组成人员和人大代表对教科文卫工作方面检查、视察的组织联系工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承办上级交办的其他工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 代表工作委员会:负责与县人大代表和上级人大代表的联系、情况收集交流工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责《代表法》的宣传贯彻和贯彻实施情况的调查研究工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责县人大代表法律法规和人民代表大会制度知识学习的组织和指导工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责常委会主任、副主任和委员走访联系人大代表的组织、联系工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责组织人大系统的干部培训。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责乡镇人大主席团工作的联系和指导。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责人大代表建议、批评和意见办理工作的联系和督办落实。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责人大代表开展活动的组织、联系工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 承办上级交办的其他工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 财政经济工作委员会:负责人大财政经济工作的日常联系、督办、信息收集反馈和业务指导工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 负责财政经济方面法律法规贯彻和人大工作情况的宣传、调研工作。

-我很好奇竹溪县人大常委会财政经济工作委员会是负责做什么的? 对国民经济计划和财政预算编制情况进行初审。

-我想知道武汉常住人口有多少? 武汉,简称“汉”,湖北省省会。

-我想知道武汉常住人口有多少? 它是武昌、汉口、汉阳三镇统称。

-我想知道武汉常住人口有多少? 世界第三大河长江及其最长支流汉江横贯市区,将武汉一分为三,形成武昌、汉口、汉阳,三镇跨江鼎立的格局。

-我想知道武汉常住人口有多少? 唐朝诗人李白在此写下“黄鹤楼中吹玉笛,江城五月落梅花”,因此武汉自古又称“江城”。

-我想知道武汉常住人口有多少? 武汉是中国15个副省级城市之一,全国七大中心城市之一,全市常住人口858万人。

-我想知道武汉常住人口有多少? 华中地区最大都市,华中金融中心、交通中心、文化中心,长江中下游特大城市。

-我想知道武汉常住人口有多少? 武汉城市圈的中心城市。

-我想知道武汉常住人口有多少? [3]武昌、汉口、汉阳三地被俗称武汉三镇。

-我想知道武汉常住人口有多少? 武汉西与仙桃市、洪湖市相接,东与鄂州市、黄石市接壤,南与咸宁市相连,北与孝感市相接,形似一只自西向东的蝴蝶形状。

-我想知道武汉常住人口有多少? 在中国经济地理圈内,武汉处于优越的中心位置是中国地理上的“心脏”,故被称为“九省通衢”之地。

-我想知道武汉常住人口有多少? 武汉市历史悠久,古有夏汭、鄂渚之名。

-我想知道武汉常住人口有多少? 武汉地区考古发现的历史可以上溯距今6000年的新石器时代,其考古发现有东湖放鹰台遗址的含有稻壳的红烧土、石斧、石锛以及鱼叉。

-我想知道武汉常住人口有多少? 市郊黄陂区境内的盘龙城遗址是距今约3500年前的商朝方国宫城,是迄今中国发现及保存最完整的商代古城之一。

-我想知道武汉常住人口有多少? 现代武汉的城市起源,是东汉末年的位于今汉阳的卻月城、鲁山城,和在今武昌蛇山的夏口城。

-我想知道武汉常住人口有多少? 东汉末年,地方军阀刘表派黄祖为江夏太守,将郡治设在位于今汉阳龟山的卻月城中。

-我想知道武汉常住人口有多少? 卻月城是武汉市区内已知的最早城堡。

-我想知道武汉常住人口有多少? 223年,东吴孙权在武昌蛇山修筑夏口城,同时在城内的黄鹄矶上修筑了一座瞭望塔——黄鹤楼。

-我想知道武汉常住人口有多少? 苏轼在《前赤壁赋》中说的“西望夏口,东望武昌”中的夏口就是指武汉(而当时的武昌则是今天的鄂州)。

-我想知道武汉常住人口有多少? 南朝时,夏口扩建为郢州,成为郢州的治所。

-我想知道武汉常住人口有多少? 隋置江夏县和汉阳县,分别以武昌,汉阳为治所。

-我想知道武汉常住人口有多少? 唐时江夏和汉阳分别升为鄂州和沔州的州治,成为长江沿岸的商业重镇。

-我想知道武汉常住人口有多少? 江城之称亦始于隋唐。

-我想知道武汉常住人口有多少? 两宋时武昌属鄂州,汉阳汉口属汉阳郡。

-我想知道武汉常住人口有多少? 经过发掘,武汉出土了大量唐朝墓葬,在武昌马房山和岳家咀出土了灰陶四神砖以及灰陶十二生肖俑等。

-我想知道武汉常住人口有多少? 宋代武汉的制瓷业发达。

-我想知道武汉常住人口有多少? 在市郊江夏区梁子湖旁发现了宋代瓷窑群100多座,烧制的瓷器品种很多,釉色以青白瓷为主。

-我想知道武汉常住人口有多少? 南宋诗人陆游在经过武昌时,写下“市邑雄富,列肆繁错,城外南市亦数里,虽钱塘、建康不能过,隐然一大都会也”来描写武昌的繁华。

-我想知道武汉常住人口有多少? 南宋抗金将领岳飞驻防鄂州(今武昌)8年,在此兴师北伐。

-我想知道武汉常住人口有多少? 元世祖至元十八年(1281年),武昌成为湖广行省的省治。

-我想知道武汉常住人口有多少? 这是武汉第一次成为一级行政单位(相当于现代的省一级)的治所。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 列夫·达维多维奇,托洛茨基是联共(布)党内和第三国际时期反对派的领导人,托派"第四国际"的创始人和领导人。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 列夫·达维多维奇·托洛茨基

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 列夫·达维多维奇·托洛茨基(俄国与国际历史上最重要的无产阶级革命家之一,二十世纪国际共产主义运动中最具争议的、也是备受污蔑的左翼反对派领袖,他以对古典马克思主义“不断革命论”的独创性发展闻名于世,第三共产国际和第四国际的主要缔造者之一(第三国际前三次代表大会的宣言执笔人)。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 在1905年俄国革命中被工人群众推举为彼得堡苏维埃主席(而当时布尔什维克多数干部却还在讨论是否支持苏维埃,这些干部后来被赶回俄国的列宁痛击)。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1917年革命托洛茨基率领“区联派”与列宁派联合,并再次被工人推举为彼得格勒苏维埃主席。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 对于十月革命这场20世纪最重大的社会革命,托洛茨基赢得了不朽的历史地位。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 后来成了托洛茨基死敌的斯大林,当时作为革命组织领导者之一却写道:“起义的一切实际组织工作是在彼得格勒苏维埃主席托洛茨基同志直接指挥之下完成的。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 我们可以确切地说,卫戍部队之迅速站在苏维埃方面来,革命军事委员会的工作之所以搞得这样好,党认为这首先要归功于托洛茨基同志。”

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? (值得一提的是,若干年后,当反托成为政治需要时,此类评价都从斯大林文章中删掉了。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? )甚至连后来狂热的斯大林派雅克·沙杜尔,当时却也写道:“托洛茨基在十月起义中居支配地位,是起义的钢铁灵魂。”

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? (苏汉诺夫《革命札记》第6卷P76。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? )不仅在起义中,而且在无产阶级政权的捍卫、巩固方面和国际共产主义革命方面,托洛茨基也作出了极其卓越的贡献(外交官-苏联国际革命政策的负责人、苏联红军缔造者以及共产国际缔造者)。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 革命后若干年里,托洛茨基与列宁的画像时常双双并列挂在一起;十月革命之后到列宁病逝之前,布尔什维克历次全国代表大会上,代表大会发言结束均高呼口号:“我们的领袖列宁和托洛茨基万岁!”

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 在欧美共运中托洛茨基的威望非常高。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 后人常常认为托洛茨基只是一个知识分子文人,实际上他文武双全,而且谙熟军事指挥艺术,并且亲临战场。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 正是他作为十月革命的最高军事领袖(在十月革命期间他与士兵一起在战壕里作战),并且在1918年缔造并指挥苏联红军,是一个杰出的军事家(列宁曾对朋友说,除了托洛茨基,谁还能给我迅速地造成一支上百万人的强大军队?

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? )。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 在内战期间,他甚至坐装甲列车冒着枪林弹雨亲临战场指挥作战,差点挨炸死;当反革命军队进攻彼得堡时,当时的彼得堡领导人季诺维也夫吓得半死,托洛茨基却从容不迫指挥作战。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 同时托洛茨基又是一个高明的外交家,他曾强硬地要求英国政府释放因反战宣传被囚禁在英国的俄国流亡革命者,否则就不许英国公民离开俄国,连英国政府方面都觉得此举无懈可击;他并且把居高临下的法国到访者当场轰出他的办公室(革命前法国一直是俄国的头号债主与政治操纵者),却彬彬有礼地欢迎前来缓和冲突的法国大使;而在十月革命前夕,他对工人代表议会质询的答复既保守了即将起义的军事秘密,又鼓舞了革命者的战斗意志,同时严格遵循现代民主与公开原则,这些政治答复被波兰人多伊彻誉为“外交辞令的杰作”(伊·多伊彻的托氏传记<先知三部曲·武装的先知>第九章P335,第十一章P390)。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 托洛茨基在国民经济管理与研究工作中颇有创造:是苏俄新经济政策的首先提议者以及社会主义计划经济的首先实践者。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1928年斯大林迟迟开始的计划经济实验,是对1923年以托洛茨基为首的左翼反对派经济纲领的拙劣剽窃和粗暴翻版。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 因为统治者的政策迟到,使得新经济政策到1928年已产生了一个威胁政权生存的农村资产阶级,而苏俄工人阶级国家不得不强力解决——而且是不得不借助已蜕化为官僚集团的强力来解决冲突——结果导致了1929年到30年代初的大饥荒和对农民的大量冤枉错杀。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 另外,他还对文学理论有很高的造诣,其著作<文学与革命>甚至影响了整整一代的国际左翼知识分子(包括中国的鲁迅、王实味等人)。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 他在哈佛大学图书馆留下了100多卷的<托洛茨基全集>,其生动而真诚的自传和大量私人日记、信件,给人留下了研究人类生活各个方面的宝贵财富,更是追求社会进步与解放的历史道路上的重要知识库之一。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 托洛茨基1879年10月26日生于乌克兰赫尔松县富裕农民家庭,祖籍是犹太人。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 原姓布隆施泰因。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1896年开始参加工人运动。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1897年 ,参加建立南俄工人协会 ,反对沙皇专制制度。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1898年 在尼古拉也夫组织工人团体,被流放至西伯利亚。

-列夫·达维多维奇·托洛茨基是什么时候开始参加工人运动的? 1902年秋以署名托洛茨基之假护照逃到伦敦,参加V.I.列宁、G.V.普列汉诺夫等人主编的<火星报>的工作。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥,位于洞庭湖与长江交汇处,东接岳阳市区洞庭大道和107国道、京珠高速公路,西连省道306线,是国内目前最长的内河公路桥。

-谁知道洞庭湖大桥有多长? 路桥全长10173.82m,其中桥长5747.82m,桥宽20m,西双向四车道,是我国第一座三塔双索面斜拉大桥,亚洲首座不等高三塔双斜索面预应力混凝土漂浮体系斜拉桥。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥是我国最长的内河公路桥,大桥横跨东洞庭湖区,全长10174.2米,主桥梁长5747.8米。

-谁知道洞庭湖大桥有多长? 大桥的通车使湘、鄂间公路干线大为畅通,并为洞庭湖区运输抗洪抢险物资提供了一条快速通道该桥设计先进,新颖,造型美观,各项技求指标先进,且为首次在国内特大型桥梁中采用主塔斜拉桥结构体系。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥是湖区人民的造福桥,装点湘北门户的形象桥,对优化交通网络绪构,发展区域经济,保障防汛救灾,缩短鄂、豫、陕等省、市西部车辆南下的运距,拓展岳阳城区的主骨架,提升岳阳城市品位,增强城市辐射力,有着十分重要的意义。

-谁知道洞庭湖大桥有多长? 自1996年12月开工以来,共有10支施工队伍和两支监理队伍参与了大桥的建设。

-谁知道洞庭湖大桥有多长? 主桥桥面高52米(黄海),设计通航等级Ⅲ级。

-谁知道洞庭湖大桥有多长? 主桥桥型为不等高三塔、双索面空间索、全飘浮体系的预应力钢筋混凝土肋板梁式结构的斜拉桥,跨径为130+310+310+130米。

-谁知道洞庭湖大桥有多长? 索塔为双室宝石型断面,中塔高为125.684米,两边塔高为99.311米。

-谁知道洞庭湖大桥有多长? 三塔基础为3米和3.2米大直径钻孔灌注桩。

-谁知道洞庭湖大桥有多长? 引桥为连续梁桥,跨径20至50米,基础直径为1.8和2.5米钻孔灌注桩。

-谁知道洞庭湖大桥有多长? 该桥设计先进、新颖、造型美观,各项技求指标先进,且为首次在国内特大型桥梁中采用主塔斜拉桥结构体系,岳阳洞庭湖大桥是我国首次采用不等高三塔斜拉桥桥型的特大桥,设计先进,施工难度大位居亚洲之首,是湖南省桥梁界的一大科研项目。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥设计为三塔斜拉桥,空间双斜面索,主梁采用前支点挂篮施工,并按各种工况模拟挂篮受力进行现场试验,获得了大量有关挂篮受力性能和实际刚度的计算参数,作为施工控制参数。

-谁知道洞庭湖大桥有多长? 利用组合式模型单元,推导了斜拉桥分离式双肋平板主梁的单元刚度矩阵,并进行了岳阳洞庭湖大桥的空间受力分析,结果表明此种单元精度满足工程要求,同时在施工工艺方面也积累了成功经验。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥的通车使湘、鄂间公路干线大为畅通,并为洞庭湖区抗洪抢险物资运输提供了一条快速通道。

-谁知道洞庭湖大桥有多长? 湖大桥设计先进,造型美丽,科技含量高。

-谁知道洞庭湖大桥有多长? 洞庭大桥还是一道美丽的风景线,大桥沿岸风景与岳阳楼,君山岛、洞庭湖等风景名胜融为一体,交相辉映,成为世人了解岳阳的又一崭新窗口,也具有特别旅游资源。

-谁知道洞庭湖大桥有多长? 洞庭湖大桥多塔斜拉桥新技术研究荣获国家科学技术进步二等奖、湖南省科学技术进步一等奖,并获第五届詹天佑大奖。

-谁知道洞庭湖大桥有多长? 大桥在中国土木工程学会2004年第16届年会上入选首届《中国十佳桥梁》,名列斜拉桥第二位。

-谁知道洞庭湖大桥有多长? 2001年荣获湖南省建设厅优秀设计一等奖,省优秀勘察一等奖。

-谁知道洞庭湖大桥有多长? 2003年荣获国家优秀工程设计金奖, "十佳学术活动"奖。

-天气预报员的布景师是谁? 芝加哥天气预报员大卫(尼古拉斯·凯奇),被他的粉丝们热爱,也被诅咒--这些人在天气不好的时候会迁怒于他,而大部分时候,大卫都是在预报坏天气。

-天气预报员的布景师是谁? ?不过,这也没什么,当一家国家早间新闻节目叫他去面试的时候,大卫的事业似乎又将再创新高。

-天气预报员的布景师是谁? 芝加哥天气预报员大卫(尼古拉斯·凯奇),被他的粉丝们热爱,也被诅咒--这些人在天气不好的时候会迁怒于他,而大部分时候,大卫都是在预报坏天气。

-天气预报员的布景师是谁? 不过,这也没什么,当一家国家早间新闻节目叫他去面试的时候,大卫的事业似乎又将再创新高。

-天气预报员的布景师是谁? 在电视节目上,大卫永远微笑,自信而光鲜,就像每一个成功的电视人一样,说起收入,他也绝对不落人后。

-天气预报员的布景师是谁? 不过,大卫的个人生活可就不那么如意了。

-天气预报员的布景师是谁? 与妻子劳伦(霍普·戴维斯)的离婚一直让他痛苦;儿子迈克吸大麻上瘾,正在进行戒毒,可戒毒顾问却对迈克有着异样的感情;女儿雪莉则体重惊人,总是愁眉苦脸、孤独寂寞;大卫的父亲罗伯特(迈克尔·凯恩),一个世界著名的小说家,虽然罗伯特不想再让大卫觉得负担过重,可正是他的名声让大卫的一生都仿佛处在他的阴影之下,更何况,罗伯特就快重病死了。

-天气预报员的布景师是谁? 和妻子的离婚、父亲的疾病、和孩子之间完全不和谐的关系,都让大卫每天头疼,而每次当他越想控制局面,一切就越加复杂。

-天气预报员的布景师是谁? 然而就在最后人们再也不会向他扔快餐,或许是因为他总是背着弓箭在大街上走。

-天气预报员的布景师是谁? 最后,面对那份高额工作的接受意味着又一个新生活的开始。

-天气预报员的布景师是谁? 也许,生活就像天气,想怎么样就怎么样,完全不可预料。

-天气预报员的布景师是谁? 导 演:戈尔·维宾斯基 Gore Verbinski

-天气预报员的布景师是谁? 编 剧:Steve Conrad .....(written by)

-天气预报员的布景师是谁? 演 员:尼古拉斯·凯奇 Nicolas Cage .....David Spritz

-天气预报员的布景师是谁? 尼古拉斯·霍尔特 Nicholas Hoult .....Mike

-天气预报员的布景师是谁? 迈克尔·凯恩 Michael Caine .....Robert Spritzel

-天气预报员的布景师是谁? 杰蒙妮·德拉佩纳 Gemmenne de la Peña .....Shelly

-天气预报员的布景师是谁? 霍普·戴维斯 Hope Davis .....Noreen

-天气预报员的布景师是谁? 迈克尔·瑞斯玻利 Michael Rispoli .....Russ

-天气预报员的布景师是谁? 原创音乐:James S. Levine .....(co-composer) (as James Levine)

-天气预报员的布景师是谁? 汉斯·兹米尔 Hans Zimmer

-天气预报员的布景师是谁? 摄 影:Phedon Papamichael

-天气预报员的布景师是谁? 剪 辑:Craig Wood

-天气预报员的布景师是谁? 选角导演:Denise Chamian

-天气预报员的布景师是谁? 艺术指导:Tom Duffield

-天气预报员的布景师是谁? 美术设计:Patrick M. Sullivan Jr. .....(as Patrick Sullivan)

-天气预报员的布景师是谁? 布景师 :Rosemary Brandenburg

-天气预报员的布景师是谁? 服装设计:Penny Rose

-天气预报员的布景师是谁? 视觉特效:Charles Gibson

-天气预报员的布景师是谁? David Sosalla .....Pacific Title & Art Studio

-韩国国家男子足球队教练是谁? 韩国国家足球队,全名大韩民国足球国家代表队(???? ?? ?????),为韩国足球协会所于1928年成立,并于1948年加入国际足球协会。

-韩国国家男子足球队教练是谁? 韩国队自1986年世界杯开始,从未缺席任何一届决赛周。

-韩国国家男子足球队教练是谁? 在2002年世界杯,韩国在主场之利淘汰了葡萄牙、意大利及西班牙三支欧洲强队,最后夺得了殿军,是亚洲球队有史以来最好成绩。

-韩国国家男子足球队教练是谁? 在2010年世界杯,韩国也在首圈分组赛压倒希腊及尼日利亚出线次圈,再次晋身十六强,但以1-2败给乌拉圭出局。

-韩国国家男子足球队教练是谁? 北京时间2014年6月27日3时,巴西世界杯小组赛H组最后一轮赛事韩国对阵比利时,韩国队0-1不敌比利时,3场1平2负积1分垫底出局。

-韩国国家男子足球队教练是谁? 球队教练:洪明甫

-韩国国家男子足球队教练是谁? 韩国国家足球队,全名大韩民国足球国家代表队(韩国国家男子足球队???? ?? ?????),为韩国足球协会所于1928年成立,并于1948年加入国际足联。

-韩国国家男子足球队教练是谁? 韩国队是众多亚洲球队中,在世界杯表现最好,他们自1986年世界杯开始,从未缺席任何一届决赛周。

-韩国国家男子足球队教练是谁? 在2002年世界杯,韩国在主场之利淘汰了葡萄牙、意大利及西班牙三支欧洲强队,最后夺得了殿军,是亚洲球队有史以来最好成绩。

-韩国国家男子足球队教练是谁? 在2010年世界杯,韩国也在首圈分组赛压倒希腊及尼日利亚出线次圈,再次晋身十六强,但以1-2败给乌拉圭出局。

-韩国国家男子足球队教练是谁? 2014年世界杯外围赛,韩国在首轮分组赛以首名出线次轮分组赛,与伊朗、卡塔尔、乌兹别克以及黎巴嫩争逐两个直接出线决赛周资格,最后韩国仅以较佳的得失球差压倒乌兹别克,以小组次名取得2014年世界杯决赛周参赛资格,也是韩国连续八次晋身世界杯决赛周。

-韩国国家男子足球队教练是谁? 虽然韩国队在世界杯成绩为亚洲之冠,但在亚洲杯足球赛的成绩却远不及世界杯。

-韩国国家男子足球队教练是谁? 韩国只在首两届亚洲杯(1956年及1960年)夺冠,之后五十多年未能再度称霸亚洲杯,而自1992年更从未打入过决赛,与另一支东亚强队日本近二十年来四度在亚洲杯夺冠成强烈对比。[1]

-韩国国家男子足球队教练是谁? 人物简介

-韩国国家男子足球队教练是谁? 车范根(1953年5月22日-)曾是大韩民国有名的锋线选手,他被欧洲媒体喻为亚洲最佳输出球员之一,他也被认为是世界最佳足球员之一。

-韩国国家男子足球队教练是谁? 他被国际足球史料与数据协会评选为20世纪亚洲最佳球员。

-韩国国家男子足球队教练是谁? 他在85-86赛季是德甲的最有价值球员,直到1999年为止他都是德甲外国球员入球纪录保持者。

-韩国国家男子足球队教练是谁? 德国的球迷一直没办法正确说出他名字的发音,所以球车范根(左)迷都以炸弹车(Cha Boom)称呼他。

-韩国国家男子足球队教练是谁? 这也代表了他强大的禁区得分能力。

-韩国国家男子足球队教练是谁? 职业生涯

-韩国国家男子足球队教练是谁? 车范根生于大韩民国京畿道的华城市,他在1971年于韩国空军俱乐部开始了他的足球员生涯;同年他入选了韩国19岁以下国家足球队(U-19)。

-韩国国家男子足球队教练是谁? 隔年他就加入了韩国国家足球队,他是有史以来加入国家队最年轻的球员。

-韩国国家男子足球队教练是谁? 车范根在27岁时前往德国发展,当时德甲被认为是世界上最好的足球联赛。

-韩国国家男子足球队教练是谁? 他在1978年12月加入了达姆施塔特,不过他在那里只待了不到一年就转到当时的德甲巨人法兰克福。

-韩国国家男子足球队教练是谁? 车范根很快在新俱乐部立足,他帮助球队赢得79-80赛季的欧洲足协杯。

-韩国国家男子足球队教练是谁? 在那个赛季过后,他成为德甲薪水第三高的球员,不过在1981年对上勒沃库森的一场比赛上,他的膝盖严重受伤,几乎毁了他的足球生涯。

-韩国国家男子足球队教练是谁? 在1983年车范根转投勒沃库森;他在这取得很高的成就,他成为85-86赛季德甲的最有价值球员,并且在1988年帮助球队拿下欧洲足协杯,也是他个人第二个欧洲足协杯。

-韩国国家男子足球队教练是谁? 他在决赛对垒西班牙人扮演追平比分的关键角色,而球会才在点球大战上胜出。

-韩国国家男子足球队教练是谁? 车范根在1989年退休,他在308场的德甲比赛中进了98球,一度是德甲外国球员的入球纪录。

-韩国国家男子足球队教练是谁? 执教生涯

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 国立台湾科技大学,简称台湾科大、台科大或台科,是位于台湾台北市大安区的台湾第一所高等技职体系大专院校,现为台湾最知名的科技大学,校本部比邻国立台湾大学。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 该校已于2005年、2008年持续入选教育部的“发展国际一流大学及顶尖研究中心计划”。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? “国立”台湾工业技术学院成立于“民国”六十三年(1974)八月一日,为台湾地区第一所技术职业教育高等学府。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 建校之目的,在因应台湾地区经济与工业迅速发展之需求,以培养高级工程技术及管理人才为目标,同时建立完整之技术职业教育体系。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? “国立”台湾工业技术学院成立于“民国”六十三年(1974)八月一日,为台湾地区第一所技术职业教育高等学府。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 建校之目的,在因应台湾地区经济与工业迅速发展之需求,以培养高级工程技术及管理人才为目标,同时建立完整之技术职业教育体系。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 本校校地约44.5公顷,校本部位于台北市基隆路四段四十三号,。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 民国68年成立硕士班,民国71年成立博士班,现有大学部学生5,664人,研究生4,458人,专任教师451位。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 2001年在台湾地区教育部筹划之研究型大学(“国立”大学研究所基础教育重点改善计画)中,成为全台首批之9所大学之一 。

-国立台湾科技大学副教授自制的动画“立体悲剧”入选的“ACM SIGGRAPH”国际动画展还有什么别称? 自2005年更在“教育部”所推动“五年五百亿 顶尖大学”计划下,遴选为适合发展成“顶尖研究中心”的11所研究型大学之一。